Abstract

When we solve problems under time pressure or when there is a lot of uncertainty, we tend not to use strict logical reasoning. Instead, we tend to resort to one or more mental shortcuts, also known as heuristics, to solve the problem. The upside of using heuristics is that they allow us to make decisions quickly, whereas going through all the steps of strict logical reasoning can be exhausting and time-consuming. The downside is that heuristic reasoning can lead to specific types of errors in our decision making. Studies have shown that both experts and non-experts use heuristics in solving problems in every walk of life, including medicine, business, politics, law enforcement, and even science. Researchers have also identified many different heuristics. In this article, we will focus on three of the most extensively studied heuristics and show how they can affect real-life, and even life-or-death, decisions.

A Demonstration of Heuristics in Action: Anchoring and Adjustment Heuristic

We humans like to think of ourselves as rational beings. However, research has shown that we do not always follow strict logical reasoning to solve problems. Instead, we sometimes use mental shortcuts, which are called heuristics. Heuristics research has greatly changed our understanding of how people solve problems.

The following example shows how heuristic thinking works. Take a good look at the painting in Figure 1. How much do you think it is worth? You probably do not know much about the painting. So, you are being asked to solve a problem, in this case estimating the value of a painting, under a great deal of uncertainty. But do your best anyway. Write down your answer before you read on.

![Figure 1 - Interchange (sometimes referred to as Interchanged), the 1955 abstract painting by Willem de Kooning [1].](https://www.frontiersin.org/files/Articles/1274085/frym-12-1274085-HTML/image_m/figure-1.jpg)

- Figure 1 - Interchange (sometimes referred to as Interchanged), the 1955 abstract painting by Willem de Kooning [1].

- How much do you think this painting is worth?

Now here is a new piece of information: The painting is owned by a private art collector who bought it from another private art collector. Once again, estimate the value of the painting and write it down. Even with this new information, you still do not have much information to help you solve the problem, right?

Did your new estimate differ from your first estimate? If so, by how much and why? If you are like most people, you probably came up with a higher estimate the second time around because you figured that private art collectors tend to be rich, and therefore they are unlikely to own, or want to own, a cheap painting. In other words, most people adjust their initial estimate (or the mental state at which they were initially positioned or “anchored”) to come up with a new estimate that takes new information into account.

If your estimate followed this pattern, congratulations, you just used a well-known mental shortcut called the anchoring and adjustment (AAA) heuristic to solve the problem! This heuristic has this name because, to arrive at an estimate, people take an initial “anchored position” about the value of the painting, and then they adjust it until they are satisfied that they have an estimate that accounts for all the information they have about the problem.

Of course, there is nothing inherently wrong with using this shortcut. After all, there was very little information to go on. Faced with this situation, you did what most people do: You used an automatic mental shortcut or heuristic (in this case, the AAA heuristic) to estimate the value of the painting. When you made your estimates, chances are that you did so quite quickly. This is the upside of using heuristics: They allow us to reach a conclusion quickly and without much effort.

What is the downside of using the AAA heuristic? To answer this question, compare your estimates to the actual value of the painting: $300 million! If you are like most people, chances are that you vastly underestimated the value of the painting. Studies have shown that this occurs because people’s anchored position is almost always a significant underestimate or overestimate, depending on the problem, so that even the adjustment is not enough to correct the initial error. Thus, using the AAA heuristic to make decisions can result in a type of error called bias. Salespeople often use the AAA heuristic to their advantage by presenting a high initial price, so that when they later make a lower offer, that offer seems more appealing (Figure 2A).

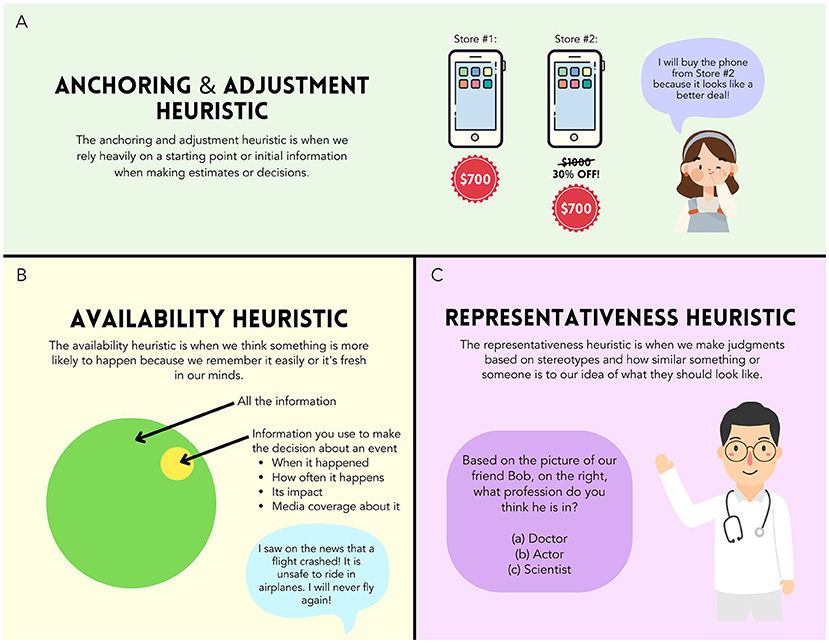

- Figure 2 - The three most important heuristics.

- (A) The AAA heuristic describes the tendency to rely heavily on a mental starting point or initial information when making estimates and decisions. (B) The availability heuristic describes the tendency to conclude that a scenario is more likely because it readily comes to mind or is mentally “available”. (C) The representativeness heuristic describes making judgments based on how similar something or someone is to our idea of what they should be like.

Discovery of the AAA Heuristic

The AAA heuristic was discovered in 1974 by Israeli American psychologists Amos Tversky (1937–1996) and Daniel Kahneman (1934–2024). They asked two groups of high school students to solve a math problem within 5 s [2]. The first group had to find the product of 1 × 2 × 3 × 4 × 5 × 6 × 7 × 8, while the second group had to find the product of the same eight numbers presented in the reverse order: 8 × 7 × 6 × 5 × 4 × 3 × 2 × 1. In what respects is this experiment similar to, and different from, the demo above and the example in Figure 2A?

The median (or the mid-point) of the responses of the first group was 512, and that of the second group was 2,250, whereas the correct answer was 40,320. How did this happen? Tversky and Kahneman reasoned that, to answer rapidly, the students quickly multiplied the first few numbers in the sequence and guessed the rest. Because the product of the first few numbers is far smaller than the full product, this anchored position was an underestimate for both groups, and the students’ adjustments were not large enough to correct it. The median was lower for the first group than for the second group because the result of the first few steps of multiplication (the anchored position) is lower for the ascending sequence than for the descending sequence (1 × 2 ×… vs. 8 × 7 ×…).
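The arithmetic behind this explanation can be checked with a short Python sketch. It computes the exact product and the running partial products for both orderings. Looking at the first four numbers is an assumption made here for illustration; the study does not report how far the students actually got.

```python
from itertools import accumulate
from math import prod
from operator import mul

ascending = [1, 2, 3, 4, 5, 6, 7, 8]
descending = list(reversed(ascending))

# The exact answer is the same for both orderings.
exact = prod(ascending)

# Running products: element k is the product of the first k + 1 numbers.
partial_ascending = list(accumulate(ascending, mul))
partial_descending = list(accumulate(descending, mul))

print(exact)                   # 40320
print(partial_ascending[:4])   # [1, 2, 6, 24]
print(partial_descending[:4])  # [8, 56, 336, 1680]
```

After the first four numbers, the ascending sequence has reached only 24, while the descending sequence has already reached 1,680. Both are far below 40,320, which fits the pattern in the data: both medians were underestimates, and the ascending group's median was the lower of the two.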

Tversky and Kahneman used the term “heuristics” to refer to AAA and other mental shortcuts because these shortcuts involve solving a problem using a heuristic, or trial-and-error, approach, as described for the AAA heuristic. This term has stuck, in no small part because of the profound influence that these scientists have had on our understanding of how people solve problems.

There are Many Different Heuristics

There are many other heuristics in addition to the AAA heuristic. Tversky and Kahneman also discovered two more heuristics that are highly important in the real world [2, 3]. One of these is called the availability heuristic. This is a mental shortcut our brains use to make decisions based on how easily certain examples or instances come to mind or become mentally “available”. If we do not hear about a particular event, we tend to assume that it is rare. For example, a doctor may fail to correctly diagnose a disease because they have not encountered it lately. Conversely, if something readily comes to mind for whatever reason (for instance, shark attacks or violent crime), we tend to assume that it happens all the time (Figure 2B).

Another major heuristic discovered by Tversky and Kahneman is the representativeness heuristic. This is the mental shortcut we use when we judge how likely an event is to happen based on how “representative” it is, or how well it fits into our existing ideas [2, 3]. This heuristic allows us to make predictions and draw conclusions without analyzing every piece of available information, and instead rely on the similarities and patterns we have seen in the past. For instance, we may vote for someone for president because they look or act “presidential”. Or we may assume that someone is a criminal because they fit our notions about what a criminal looks like or acts like (Figure 2C).

Other researchers have reported dozens of other heuristics. For example, the affect heuristic is when a decision is driven by emotion (also called affect), such as when we make decisions because they feel good. The familiarity heuristic is when we assume that our past experiences can reliably predict the future. For instance, we may decide that the stock market will go up by 1,000 points this year because it went up by 1,000 points last year. Researchers also debate whether all these heuristics are distinct from each other, or simply variations of a relatively small number of underlying heuristics.

Heuristics Can Have Life-Or-Death Consequences

In addition to the real-life examples we have noted, heuristics can even play a significant role in life-or-death decisions, from law enforcement to medicine, from national security to military combat. Decision making in law enforcement can reflect biases stemming from the use of one or more heuristics [4, 5]. For instance, police officers, lawyers, juries, or judges may treat some minority groups far more harshly because of biases resulting from the representativeness heuristic, availability heuristic, or both [5]. In medicine, doctors can miss early signs of heart attack in women much more often than in men because heart attack symptoms in women often look different from the “typical” symptoms the doctors are trained to look for [3, 6]. Likewise, some studies have shown that doctors over-diagnose cancer because they fail to properly account for how often cancer occurs in the patient population, which is a form of the representativeness heuristic [6].
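The effect of ignoring how often a disease occurs can be made concrete with Bayes' rule. The sketch below is illustrative only: the prevalence, sensitivity, and false-positive rate are hypothetical numbers chosen for easy arithmetic, not values from the cited studies, and `posterior_probability` is a helper name introduced here.

```python
def posterior_probability(prevalence, sensitivity, false_positive_rate):
    """Probability of disease given a positive test, using Bayes' rule."""
    true_positives = prevalence * sensitivity
    false_positives = (1.0 - prevalence) * false_positive_rate
    return true_positives / (true_positives + false_positives)


# Hypothetical numbers, for illustration only:
# 1% of patients have the disease, the test detects 90% of real cases,
# and it wrongly flags 9% of healthy patients.
p = posterior_probability(prevalence=0.01, sensitivity=0.90, false_positive_rate=0.09)
print(f"{p:.1%}")
```

Under these assumed numbers, a positive result corresponds to only about a 9% chance of disease, even though the test detects 90% of real cases. Judging only by how well the result fits the picture of the disease, while ignoring the 1% base rate, would greatly overestimate that probability.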

What We Know About Heuristics and What Remains to Be Discovered

Heuristics have been studied extensively for more than half a century now, as examples of the limits of rational thinking, or instances in which human beings do not always follow strict logical reasoning [7]. These studies have established several points. First, using heuristics is a natural function of the human mind, and not a rare, special-case scenario. Second, people tend to use heuristics when they must solve problems under time pressure, uncertainty, risk, and/or when the problem is complicated. Third, both experts and non-experts use heuristics. This means that while we might be able to train ourselves to minimize the downsides of heuristics, we cannot fully or permanently “train away” the habit of using heuristics. Finally, people use heuristics when solving many different types of problems. That is, decision makers in important arenas such as business, the military, public policy, science, medicine, and law enforcement all use heuristics sometimes. Not surprisingly, the importance of heuristics research has been recognized by many awards. Most notably, Herbert Simon, Daniel Kahneman, and Richard Thaler have each received the Nobel Prize (in 1978, 2002, and 2017, respectively) for their heuristics-related research [8].

So far, heuristics research has mostly focused on understanding precisely how each of the known heuristics works. Much of this research has been carried out under controlled laboratory conditions, where researchers have tended to study each heuristic on its own, often using imaginary problems. But the problems in the real world are typically more complex. For one thing, multiple heuristics, not just one, often come into play at the same time. Thus, translating the current understanding of heuristics to the real world is an important area of future research.

Glossary

Rational: Based on, or reliant upon, logical thinking.

Anchoring and Adjustment (AAA) Heuristic: When we rely heavily on a starting point or initial information when making estimates or decisions.

Bias: A preference toward a particular perspective, usually leading to unfair judgment.

Risk: Chance that a decision may lead to some type of bad outcome.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

VA and AC were supported by an educational grant to JH by the Undergraduate Research Apprenticeship Program of the U.S. Army Educational Outreach Program (AEOP). Research in JH’s laboratory has been supported by the Army Research Office (ARO) grant #W911NF-15-1-0311 to JH. Figure 2 was created by VA.

References

[1] Rebolledo, C. 2023. 10 of the Most Expensive Paintings Sold Over the Past Decade and the Artists Behind Them. Singulart Magazine. Available online at: https://www.singulart.com/en/blog/2023/04/25/10-of-the-most-expensive-paintings-sold-over-the-past-decade-and-the-artists-behind-them/ (accessed June 29, 2023).

[2] Tversky, A., and Kahneman, D. 1974. Judgment under uncertainty: heuristics and biases. Science 185:1124–1131. doi: 10.1126/science.185.4157.1124

[3] Lewis, M. 2016. The Undoing Project: a Friendship That Changed Our Minds, 1st Edn. New York, NY: W.W. Norton & Company.

[4] Stark, J. 2021. Addressing Implicit Bias in Policing. Police Chief Online. Available online at: https://www.policechiefmagazine.org/addressing-implicit-bias-in-policing/?ref=918e82aeaa073d4a198c3ce0c6571345 (accessed July 2, 2023).

[5] Oldfather, C. 2007. Heuristics, Biases, and Criminal Defendants. Marquette Law Review. Available online at: https://scholarship.law.marquette.edu/cgi/viewcontent.cgi?referer=&httpsredir=1&article=1134&context=mulr (accessed July 3, 2023).

[6] Marewski, J. N., and Gigerenzer, G. 2012. Heuristic decision making in medicine. Dialogues Clin. Neurosci. 14:77–89. doi: 10.31887/DCNS.2012.14.1/jmarewski

[7] Gardner, J. L. 2019. Optimality and heuristics in perceptual neuroscience. Nat. Neurosci. 22:514–523. doi: 10.1038/s41593-019-0340-4

[8] Kahneman, D. 2013. Thinking, Fast and Slow. New York, NY: Farrar, Straus and Giroux.