Abstract

Many people cannot communicate because of physical conditions such as paralysis. These patients cannot speak with their friends, but their brains still work well. They can think for themselves and would benefit from a device that could read their minds and translate their thoughts into audible speech. In our study, we placed electrodes beneath patients’ skulls, directly on the surface of the brain, and measured brain activity while the patients were thinking. We then tried to decode the words that they imagined and translate them into audible sounds. We showed that we could decode some aspects of the sounds that patients were thinking. This was our first attempt at translating thoughts into speech, and we hope to get much better, because many patients who cannot speak but have thoughts in their minds could benefit from a “speech decoder.”

Many people suffer from severe paralysis, which means they cannot move parts of their body, such as their arms and legs. Some of them cannot communicate what they want to say, even though they are fully aware and have words in their minds. These people are prisoners of their own bodies. Their brains are fully functional and they can think normally, but their bodies no longer respond. These patients would benefit from a device that could read their minds, translate their brain activity, and speak out loud the words they cannot say. In this study, we tried to decode what people think, as a first step toward building a mind-reading device that can speak out loud the words people wish to say. Cool, right?

But building such a device is very challenging for scientists, because several complicated problems need to be solved. First, we need to record people’s brain activity using the latest technology available (Figure 1A). Second, we need to understand how speech is stored and processed in the brain (Figure 1B). Third, we need mathematical tools (Figure 1C) that translate the brain activity into speech that everyone can hear and understand (Figure 1D).

- Figure 1 - Decoding method.

- A. The brain electrical activity is recorded using electrodes (red circles) placed directly at the surface of the brain, beneath the skull. B. Thoughts are mapped to the brain activity. C. Mathematical tools are used to decode and translate the brain electrical activity into understandable speech. D. The decoded speech is played out loud.

Our approach is a little bit like translating a foreign language into your own language. For instance, if you do not speak French but want to understand what your friend is saying in French, what do you need? You need a translator. A translator has spent many years studying both languages and knows how they relate to each other. Using the rules of both languages, a translator can map French words to English words, allowing you to understand what your friend is saying. In our study, we want to translate the brain activity generated while thinking into audible and understandable speech sounds.

What Exactly Are Speech Sounds?

In everyday life, we exchange thoughts and ideas through language and communication. During these conversations, we listen to people, think about the topic in question, and produce speech to reply and interact with them. This process is highly complex, and researchers have been working for years to understand how language is encoded in the brain [1].

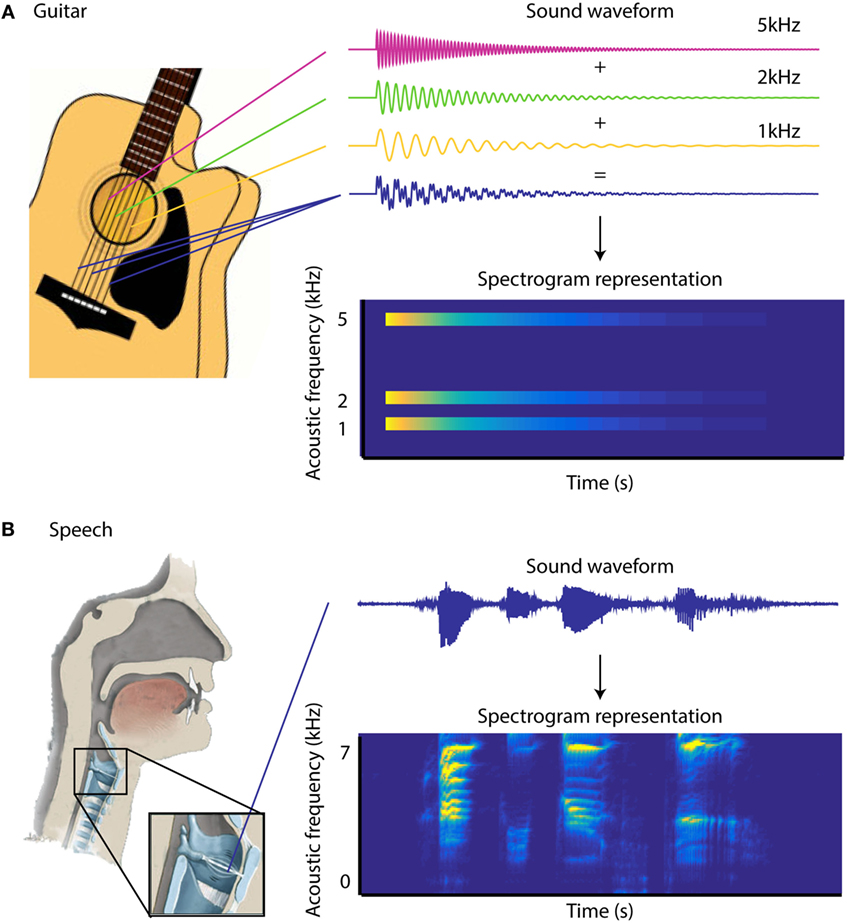

When we speak out loud, we produce sounds. A sound is a wave, and sound waves can come from many sources, such as a dog barking, a tree falling, thunder during a storm, or a person speaking. A sound wave travels through the air, and you hear a sound when the wave reaches your ear and makes your eardrum vibrate. For instance, when a guitar string is plucked, it creates a sound wave that travels through the air. We perceive sounds of different pitches because sound waves have different frequencies (Figure 2). When the “A” string of the guitar is plucked, it produces a low-pitched sound, because the string vibrates at a low frequency. On the other hand, when the “B” string is plucked, it produces a higher-pitched sound, because the string vibrates at a higher frequency. When three strings are plucked at the same time, the resulting sound wave is the sum of the three individual sound waves.

Speech production is similar. When someone speaks out loud, the listener hears one sound. However, this sound is composed of several frequencies that vary over time. In particular, a man who speaks with a low-pitched voice produces sound waves made mostly of low frequencies, whereas a woman with a high-pitched voice produces sound waves made mostly of higher frequencies. We all have different “voice boxes” with different mechanical properties, so we produce speech of different pitches.

- Figure 2 - Frequency decomposition of sound.

- A. Examples of sound waves when different individual guitar strings are plucked and when several different strings are plucked at the same time. Lower panel: spectrogram representation of the blue sound wave. B. Example of a speech sound wave and its corresponding spectrogram representation.
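For readers who like to experiment, the idea that several strings sounding together make one wave equal to the sum of their individual waves can be tried out in a few lines of Python. This is a minimal sketch, not code from our study: it assumes each string produces a pure sine wave (real strings also produce extra frequencies called harmonics), and the sampling rate and the helper names `sine_wave` and `mix` are made up for illustration. The frequencies are the usual values for the open A (about 110 Hz) and B (about 247 Hz) strings of a guitar in standard tuning.

```python
import math

SAMPLE_RATE = 8000  # samples per second (an assumed value for this sketch)

# Approximate fundamental frequencies of two open guitar strings (standard tuning).
A_STRING_HZ = 110.0
B_STRING_HZ = 246.94


def sine_wave(freq_hz, duration_s, amplitude=1.0):
    """Return the samples of a pure tone at freq_hz (hypothetical helper)."""
    n_samples = round(SAMPLE_RATE * duration_s)
    return [amplitude * math.sin(2 * math.pi * freq_hz * n / SAMPLE_RATE)
            for n in range(n_samples)]


def mix(*waves):
    """Add several waves sample by sample, like strings sounding together."""
    return [sum(samples) for samples in zip(*waves)]


low = sine_wave(A_STRING_HZ, 0.01)   # low-pitched tone, 10 ms long
high = sine_wave(B_STRING_HZ, 0.01)  # higher-pitched tone, 10 ms long
chord = mix(low, high)               # what you hear when both strings sound

print(len(chord))  # → 80 samples (10 ms at 8000 samples per second)
```

Each sample of `chord` is simply `low[n] + high[n]`, which is exactly what “the sum of the individual sound waves” means. Adding a third `sine_wave` to `mix` works the same way.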

Thinking is the action of using your mind to produce ideas, make decisions, recall memories, and communicate with others. We all think during the day and have a little voice inside our heads that guides us. When we think, we can hear words in our heads; we can perceive different pitches, voices, and sounds without actually hearing any sound. This suggests that we have internal representations of speech that are very similar to those we use when we speak out loud. To understand how these speech sounds are produced (out loud or in our heads), we need to look at brain activity.

Recording Brain Activity

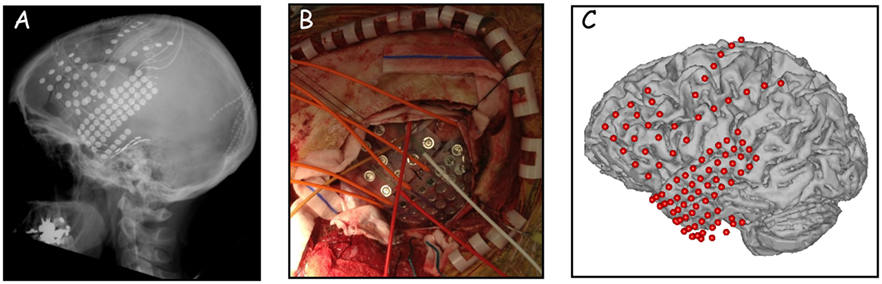

To perform this first study, we recorded the electrical activity in people’s brains by placing electrodes beneath their skulls, directly on the surface of the brain (Figure 3). This technique is called electrocorticography. It is risky because it requires surgery, so we only use it with patients who already need brain surgery. But which patients exactly? In most cases, they are patients with epilepsy. You may know someone in your family or at school who has epilepsy and sometimes has a seizure: sudden, uncontrolled, and violent shaking of the body caused by repeated muscle contraction and relaxation. Seizures (sometimes called convulsions) happen when there is unusual electrical activity in the brain. In some cases, medication cannot control the seizures, and the only way to treat them is to find and remove the part of the brain that is malfunctioning and causing them. To locate exactly which part of the brain causes the seizures, neurosurgeons place these electrodes on the brain for 1 or 2 weeks. We take advantage of this time to do our experiments with the patients (if they agree, of course) and investigate how the brain works when we say words in our heads.

- Figure 3 - Placement of electrodes.

- A. X-ray of electrode placement. B. Surgical placement of electrodes on the surface of the brain. C. Electrode positions shown on a 3-D model of the brain.

So, do we know how the brain works when we are saying words out loud and in our heads?

Understanding How the Speaking Brain Works

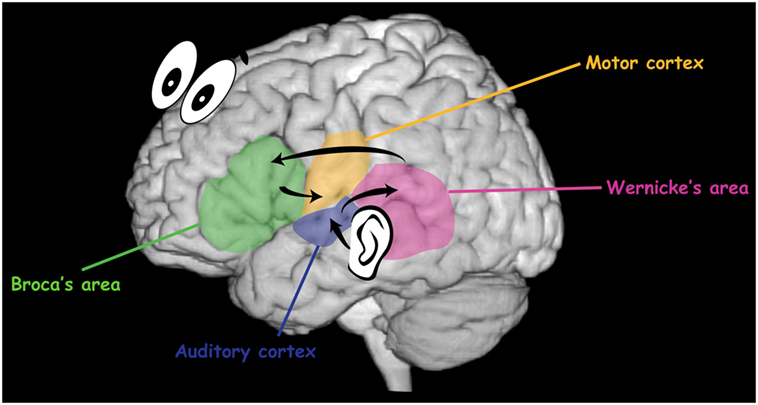

Several parts of the brain are involved in hearing, understanding, and producing speech. These areas interact with each other through a network of connected neurons (brain cells). Neurons generate electrical activity when they are activated, and they connect with each other to form a network through which they can “talk” to each other. First, the auditory (hearing) system in the temporal part of the brain, shown in blue in Figure 4, analyzes the speech sounds. Then, Wernicke’s area (pink) is responsible for understanding the speech sounds. Finally, Broca’s area (green) is involved in planning a speech response and transmitting the information to the motor cortex (yellow), the part of the brain that controls movement. The motor cortex then coordinates the small muscles in your throat, mouth, and lips (called articulators) that produce the speech we hear.

- Figure 4 - Organization of speech in the brain.

- The auditory cortex is involved in hearing sounds. Wernicke’s area is involved in understanding speech sounds, whereas Broca’s area is involved in planning a speech response. Finally, the motor cortex coordinates the muscles that produce words.

When we think, the language areas of our brain are active. Speaking out loud or speaking in your head involves overlapping mechanisms and brain areas. In particular, the auditory cortex, Wernicke’s area, and Broca’s area are active in both speaking out loud and speaking in your head. What happens in the brain is not exactly the same in both cases, and one of the major differences is that the motor cortex is much more active when speaking out loud than when speaking in your head. This is a good thing, or you might say things out loud that you do not want to say to someone, like “your haircut is weird!”

For instance, if you say the word “baseball,” this generates a well-defined pattern of brain activity, like a signature on a piece of paper. Every time you say the same word, it generates a very similar pattern of brain activity. On the other hand, if you say the word “horse,” it generates a different pattern of brain activity. Similarly, when you think about the word “baseball,” this generates a brain response similar to the one created when you say the word out loud. Once we learn what the pattern of brain activity for certain words looks like, we can predict which word was said by looking at the pattern. In other words, we can decode the brain activity and reconstruct some aspects of the speech sounds.
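The idea of learning a “signature” for each word and then recognizing it can be illustrated with a very simple method called nearest-template matching. This is a hypothetical sketch, not the decoder used in our study (which reconstructed spectrograms rather than picking whole words): the electrode values below are invented numbers, and the helper names `average_pattern`, `distance`, and `predict_word` are made up for illustration.

```python
import math

# Hypothetical toy data: each list is the activity (arbitrary units) on four
# electrodes during one repetition of a word. These numbers are invented for
# illustration; they are NOT recordings from the study.
training_trials = {
    "baseball": [[5.1, 0.9, 3.2, 0.4], [4.8, 1.1, 3.0, 0.6], [5.3, 1.0, 2.9, 0.5]],
    "horse":    [[1.2, 4.7, 0.8, 3.9], [0.9, 5.0, 1.1, 4.2], [1.1, 4.8, 1.0, 4.0]],
}


def average_pattern(trials):
    """Average several noisy repetitions into one 'signature' per word."""
    n = len(trials)
    return [sum(values) / n for values in zip(*trials)]


def distance(a, b):
    """Euclidean distance between two activity patterns."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


# Learn one signature (template) per word.
templates = {word: average_pattern(trials) for word, trials in training_trials.items()}


def predict_word(pattern):
    """Pick the word whose signature is closest to the new pattern."""
    return min(templates, key=lambda word: distance(pattern, templates[word]))


new_trial = [4.9, 1.2, 3.1, 0.7]  # another invented repetition
print(predict_word(new_trial))  # → baseball
```

Averaging several repetitions helps because each repetition of a word gives a similar, but not identical, pattern of activity.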

Speech Decoder

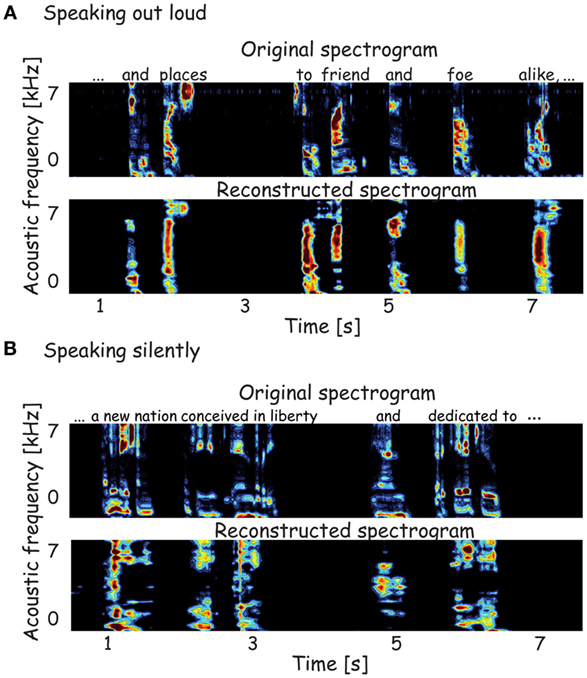

This study was divided into two parts. In the first part, we decoded speech produced out loud. In the second part, we decoded speech produced silently in the patient’s head.

In the first part, we recorded brain activity while people were speaking out loud. Then, we built a decoding model on a computer that was able to reconstruct some aspects of the sound spoken out loud from the brain activity [2].

Wait a second. Which aspects of speech sounds were decoded exactly?

Remember, sounds are composed of a mixture of different frequencies. Using a mathematical formula, it is possible to isolate each frequency present in a given sound and measure how strong it is over time. The result of breaking down a sound in this way is called a spectrogram. It is this spectrogram representation that we decoded in this study. Why did we decode each individual frequency, rather than the mixture of frequencies present in the whole sound? Because this is how the brain processes sounds: it analyzes each individual frequency rather than the whole mixture.
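The “mathematical formula” mentioned above is usually the Fourier transform. A spectrogram is commonly computed by cutting the sound into short pieces (frames) and applying a Fourier transform to each piece, a procedure called the short-time Fourier transform. The sketch below does this with a plain discrete Fourier transform written out by hand so that it needs no extra libraries. It is a simplified illustration under our own assumptions (sampling rate, frame size, non-overlapping frames, no smoothing window), not the exact processing used in the study; real software uses the much faster FFT algorithm and overlapping, windowed frames. The names `dft_magnitudes` and `spectrogram` are hypothetical helpers.

```python
import cmath
import math


def dft_magnitudes(frame):
    """Strength of each frequency bin (0 .. N/2) of one frame, via a plain DFT."""
    n = len(frame)
    return [abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)))
            for k in range(n // 2 + 1)]


def spectrogram(signal, frame_size, hop):
    """Cut the signal into frames and measure every frequency in each frame.

    Returns a list of frames; each frame is a list of magnitudes, one per bin.
    """
    return [dft_magnitudes(signal[start:start + frame_size])
            for start in range(0, len(signal) - frame_size + 1, hop)]


SAMPLE_RATE = 8000  # assumed sampling rate (samples per second)
FRAME_SIZE = 200    # 25 ms frames, so each bin is 8000 / 200 = 40 Hz wide
HOP = 200           # non-overlapping frames keep the sketch simple

# A toy "sound": 400 Hz for 50 ms, then 1200 Hz for 50 ms.
low_part = [math.sin(2 * math.pi * 400 * n / SAMPLE_RATE) for n in range(400)]
high_part = [math.sin(2 * math.pi * 1200 * n / SAMPLE_RATE) for n in range(400)]
spec = spectrogram(low_part + high_part, FRAME_SIZE, HOP)

bin_hz = SAMPLE_RATE / FRAME_SIZE
for i, frame in enumerate(spec):
    strongest = max(range(len(frame)), key=frame.__getitem__)
    print(f"frame {i}: strongest frequency ~ {strongest * bin_hz:.0f} Hz")
```

The printed lines show the strongest frequency switching from 400 Hz (frames 0 and 1) to 1200 Hz (frames 2 and 3). That change over time is exactly what a spectrogram makes visible, and it is the kind of picture shown in Figure 2.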

Decoding speech produced silently in the patient’s head was trickier than decoding speech produced out loud. This is because we do not know exactly how and when the brain activity generated while thinking maps to speech sounds, since no sound is produced during thinking. So, we have to figure out when a person is thinking, which is a tough problem. We have a pretty good idea of which parts of the brain are active, but we cannot say precisely which signals are associated with specific speech sounds. So, what did we do to decode thoughts? We used another strategy, based on the fact that speaking out loud and speaking in your head involve partially overlapping brain mechanisms. We took the model that could decode words spoken out loud and applied it to brain data generated while thinking. Using this technique, we were able to reconstruct some aspects of the speech sounds, corresponding to the sounds that would have been produced if the words had been spoken out loud.
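The strategy of training a decoder on speech spoken out loud and then reusing it on silent speech can be sketched as follows. This is a hypothetical, heavily simplified illustration: it assumes a linear relationship between the activity of a few electrodes and the strength of one frequency band in the spectrogram, in the spirit of the linear decoding models described in [2], and it uses simulated numbers rather than real recordings. The names `fit_linear_decoder`, `decode`, and `simulate`, and the “true” weights, are all made up for this example.

```python
import random


def fit_linear_decoder(features, targets, ridge=1e-3):
    """Fit weights so that a weighted sum of electrode features matches targets.

    Solves the ridge-regularized normal equations (X^T X + ridge*I) w = X^T y
    by Gaussian elimination. A constant 1 is appended to each row so that the
    last weight acts as a bias (the small ridge term is applied to it too).
    """
    rows = [list(f) + [1.0] for f in features]
    d = len(rows[0])
    a = [[sum(r[i] * r[j] for r in rows) + (ridge if i == j else 0.0) for j in range(d)]
         for i in range(d)]
    b = [sum(r[i] * y for r, y in zip(rows, targets)) for i in range(d)]
    for col in range(d):  # forward elimination with partial pivoting
        pivot = max(range(col, d), key=lambda row: abs(a[row][col]))
        a[col], a[pivot] = a[pivot], a[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(col + 1, d):
            factor = a[r][col] / a[col][col]
            for c in range(col, d):
                a[r][c] -= factor * a[col][c]
            b[r] -= factor * b[col]
    w = [0.0] * d
    for i in reversed(range(d)):  # back-substitution
        w[i] = (b[i] - sum(a[i][c] * w[c] for c in range(i + 1, d))) / a[i][i]
    return w


def decode(weights, features):
    """Apply trained weights to new brain-activity features."""
    return [sum(w * x for w, x in zip(weights, list(f) + [1.0])) for f in features]


random.seed(0)
TRUE_WEIGHTS = [2.0, -1.0, 0.5]  # invented relationship, for illustration only
TRUE_BIAS = 0.3


def simulate(n_trials, noise):
    """Simulated electrode activity and the power of one frequency band."""
    feats = [[random.gauss(0, 1) for _ in TRUE_WEIGHTS] for _ in range(n_trials)]
    power = [sum(w * x for w, x in zip(TRUE_WEIGHTS, f)) + TRUE_BIAS + random.gauss(0, noise)
             for f in feats]
    return feats, power


# Step 1: train where the sound is known (speech spoken out loud).
overt_features, overt_power = simulate(200, noise=0.1)
weights = fit_linear_decoder(overt_features, overt_power)

# Step 2: reuse the same weights on activity from imagined speech
# (simulated here with more noise); no sound was recorded for these trials.
covert_features, _ = simulate(5, noise=0.5)
reconstructed = decode(weights, covert_features)
print([round(w, 2) for w in weights])
```

A full decoder would fit one set of weights for every frequency band (and would usually use brain activity from a short time window around each moment), but the two steps stay the same: learn the weights where the sound is known, then apply them where it is not.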

Results

Our results showed that we could partially decode the spectrogram of what patients were thinking. Figure 5 shows an example of the original spectrogram of the spoken words next to the spectrograms reconstructed from brain activity while the patient said the same words out loud (Figure 5A) and while the patient said them silently (Figure 5B). The reconstruction is pretty accurate [3]. However, the quality of the sound was not good enough to understand exactly what the patients were thinking. But this is our first attempt, and we hope to get much better. In fact, we need to get much better, since many patients who cannot speak but have thoughts in their minds could benefit from this research.

- Figure 5 - Examples of an original and a reconstructed spectrogram.

- A. Original spectrogram of the sound spoken out loud and spectrogram reconstructed from the brain activity while the patient was speaking out loud. B. Original spectrogram of the sound spoken out loud and spectrogram reconstructed from the brain activity while speaking silently (thinking).
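One common way to turn “pretty accurate” into a number is to measure the correlation between the original and the reconstructed spectrograms: a value near 1 means they rise and fall together, and a value near 0 means they are unrelated. The sketch below is an assumed, simplified version of such a measure (it correlates each frequency band over time and averages the results). The spectrogram values are invented toy numbers, not results from our study, and the names `pearson_r` and `spectrogram_similarity` are made up for illustration.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation: 1 = same shape, 0 = unrelated, -1 = opposite."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def spectrogram_similarity(original, reconstructed):
    """Correlate two spectrograms (lists of time frames) band by band, then average."""
    n_bands = len(original[0])
    per_band = [pearson_r([frame[k] for frame in original],
                          [frame[k] for frame in reconstructed])
                for k in range(n_bands)]
    return sum(per_band) / n_bands


# Invented toy spectrograms: 4 time frames x 2 frequency bands.
original = [[1.0, 5.0], [2.0, 4.0], [3.0, 3.0], [4.0, 2.0]]
reconstructed = [[1.2, 4.6], [1.9, 4.4], [3.3, 2.8], [3.8, 2.1]]

score = spectrogram_similarity(original, reconstructed)
print(round(score, 2))
```

Because the toy reconstruction follows the ups and downs of the original closely, the score comes out close to 1. A reconstruction that was only partly right would give a lower value.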

This work shows that it may eventually be possible to implant a speech decoder in patients with devastating neurological disorders that affect their language ability, and thus restore their ability to speak. Can you imagine how great it would be if we could help a patient say “I am hungry” or “I love you”? That is why science is so much fun: we can help people have better lives.

Glossary

Electrocorticography: A type of brain activity monitoring that uses electrodes placed directly on the exposed surface of the brain.

Articulators: Small muscles in your throat, mouth, and lips that produce sounds.

Spectrogram: A visual representation of how much of each individual frequency is present in a given sound over time.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Original Article Reference

Martin, S., Brunner, P., Holdgraf, C., Heinze, H. J., Crone, N. E., and Rieger, J., et al. 2014. Decoding spectrotemporal features of overt and covert speech from the human cortex. Front. Neuroeng. 7:14. doi: 10.3389/fneng.2014.00014

References

[1] Hickok, G., and Poeppel, D. 2007. The cortical organization of speech processing. Nat. Rev. Neurosci. 8(5):393–402. doi: 10.1038/nrn2113

[2] Pasley, B. N., David, S. V., Mesgarani, N., Flinker, A., Shamma, S. A., and Crone, N. E., et al. 2012. Reconstructing speech from human auditory cortex. PLoS Biol. 10(1):e1001251. doi: 10.1371/journal.pbio.1001251

[3] Martin, S., Brunner, P., Holdgraf, C., Heinze, H. J., Crone, N. E., and Rieger, J., et al. 2014. Decoding spectrotemporal features of overt and covert speech from the human cortex. Front. Neuroeng. 7:14. doi: 10.3389/fneng.2014.00014