Abstract

Artificial intelligence applications have developed rapidly over the past few years, allowing computers to perform complex tasks such as driving cars without a human driver, making decisions, and recognizing faces. These applications require enormous amounts of information and many calculations performed in parallel. This article shows why the structure of today’s computers is inefficient for artificial intelligence applications. We will then see how taking inspiration from the way the human brain works could allow us to build completely new kinds of computers, overturning the way computers have been built for many years.

Standard Computers

Computers come in different shapes and sizes, from the tiny computers in cellphones to supercomputers in huge halls called server farms. Despite big differences in their uses and abilities, all computers share a similar basic structure: they contain a computation unit called a processor and a data storage unit called memory. The processor performs arithmetic operations on numbers stored in the memory. To perform a complex operation, it is broken down into simpler operations written as a computer program, in which each operation is a command written on its own line. For example, to add two numbers, the computer actually performs several operations: it reads each number by bringing it from the memory to the processor, adds the numbers (using an electronic component inside the processor that adds numbers), and writes the result to a specific location in the memory. The commands themselves are also stored in the memory.

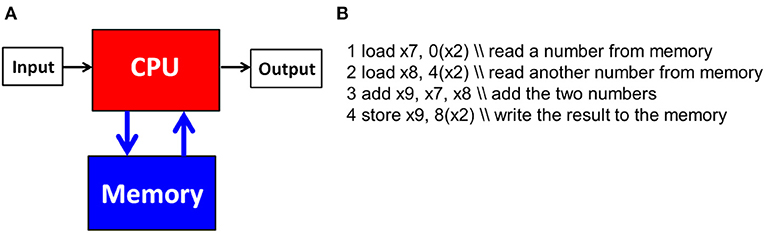

This basic computer structure was first described by John von Neumann, of the Institute for Advanced Study in Princeton, in 1945, and it is therefore called the von Neumann architecture (Figure 1) [1]. Over the years, computers have changed their shapes and greatly improved their abilities, both in storage (the amount of information they can save) and in computation (the number and complexity of operations they can perform in a given time). However, the basic structure and principles have not changed since 1945. Today’s processors perform arithmetic operations very quickly: a modern processor can perform more than one billion operations, such as additions and subtractions, in 1 second. Reading from and writing to the memory is much slower than processing and consumes a lot of energy. Therefore, computers are most efficient in applications where the data the processor needs are already available close to it, so that little time and energy is spent moving data back and forth.

- Figure 1 - (A) von Neumann architecture.

- The computer is built from a control and computation unit (processor, CPU) and a data storage unit (memory). It also contains input and output units. (B) An example of a computer program that adds two numbers. Commands 1 and 2 read both numbers from memory and bring them to the processor, command 3 adds the two numbers in the processor, and command 4 stores the result in memory.
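The four commands of Figure 1B can be traced with a toy simulator. This is a minimal sketch, not a model of a real processor: the instruction names (`LOAD`, `ADD`, `STORE`), the register names `R1` and `R2`, and the memory addresses are all hypothetical. Like the von Neumann architecture, it keeps the program and the data in one memory, and it counts how often that memory is accessed compared with how often the processor actually adds.

```python
def run(memory, program_end):
    """Execute the commands stored at addresses 0..program_end-1 of `memory`.

    Returns the number of memory accesses and the number of additions.
    """
    registers = {"R1": 0, "R2": 0}  # small storage inside the processor
    pc = 0                          # address of the next command
    memory_accesses = 0
    additions = 0
    while pc < program_end:
        instruction = memory[pc]    # fetching a command is itself a memory access
        memory_accesses += 1
        pc += 1
        op = instruction[0]
        if op == "LOAD":            # bring a number from memory to the processor
            _, reg, addr = instruction
            registers[reg] = memory[addr]
            memory_accesses += 1
        elif op == "ADD":           # done entirely inside the processor
            _, dst, src_a, src_b = instruction
            registers[dst] = registers[src_a] + registers[src_b]
            additions += 1
        elif op == "STORE":         # write the result back to memory
            _, reg, addr = instruction
            memory[addr] = registers[reg]
            memory_accesses += 1
        else:
            raise ValueError(f"unknown instruction {instruction!r}")
    return memory_accesses, additions

# Addresses 0-3 hold the program (Figure 1B); addresses 4-6 hold the data.
memory = [
    ("LOAD", "R1", 4),          # command 1: read the first number
    ("LOAD", "R2", 5),          # command 2: read the second number
    ("ADD", "R1", "R1", "R2"),  # command 3: add them in the processor
    ("STORE", "R1", 6),         # command 4: store the result
    2, 3, 0,                    # data: two inputs and a slot for the result
]
accesses, adds = run(memory, program_end=4)
print(memory[6], accesses, adds)  # 5 7 1
```

In this sketch, adding two numbers takes seven memory accesses (four to fetch the commands, two to read the numbers, one to store the result) but only one addition, which illustrates why slow memory, rather than the fast processor, tends to dominate the cost.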

Artificial Intelligence is Shuffling the Cards

In recent years, artificial intelligence (AI) applications have multiplied and can now be found in almost every field, including medicine, the automotive industry, security, and manufacturing. The ability to connect many computers together, allowing huge amounts of information to be stored and processed, has enabled some very impressive AI systems. In some tasks, computers now outperform humans. For example, some object recognition programs identify objects in images more accurately than the average person, and automated cars are being developed with the goal of driving more safely than human drivers.

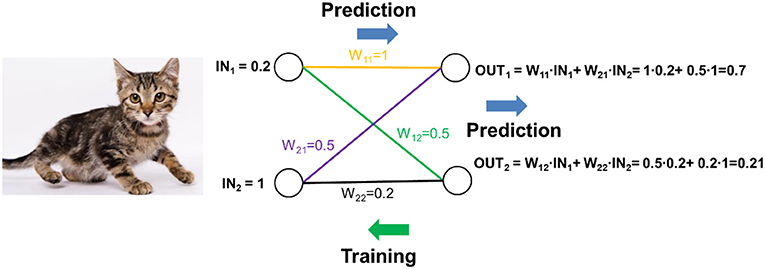

AI applications are shaking the foundations of the von Neumann architecture and the computational model that has held for so many years. AI applications require both enormous amounts of data and computational models with a huge number of parameters (numbers) that are adjusted to fit the data. For example, to train a computer to identify objects (Figure 2), millions of images are fed to the computer, each with a tag giving the correct answer (for example, the type of animal in the image). For each example, a training step is performed in which the result predicted by the computer is compared to the tag. Based on the difference between the desired result and the result obtained by the computer, the parameters of the computational model are updated according to predefined mathematical rules; usually, a larger error results in a larger change in the parameters. After many examples, the parameters of the model allow it to produce accurate results.
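The training procedure described above can be sketched in a few lines. This is a minimal illustration under strong assumptions: the model is a single output computed as a weighted sum of the inputs, and the update rule is the classic delta (least-mean-squares) rule, chosen here only because each weight changes in proportion to the error. The data, learning rate, and number of passes are hypothetical; real AI systems use far larger models and more elaborate rules.

```python
def predict(weights, inputs):
    """The model's result: a weighted sum of the inputs."""
    return sum(w * x for w, x in zip(weights, inputs))

def train(examples, n_inputs, learning_rate=0.1, epochs=200):
    """Adjust the weights so that predictions match the tags (delta rule)."""
    weights = [0.0] * n_inputs
    for _ in range(epochs):
        for inputs, tag in examples:
            error = tag - predict(weights, inputs)  # desired minus obtained
            # A larger error produces a larger change in every weight.
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, inputs)]
    return weights

# Hypothetical tagged examples, generated by the rule tag = 2*x1 - x2.
examples = [([1.0, 0.0], 2.0), ([0.0, 1.0], -1.0),
            ([1.0, 1.0], 1.0), ([2.0, 1.0], 3.0)]
weights = train(examples, n_inputs=2)
print([round(w, 3) for w in weights])  # close to [2.0, -1.0]
```

After many passes over the examples, the weights settle near the values that reproduce every tag, which is the sense in which "the parameters of the model allow it to produce accurate results."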

- Figure 2 - Artificial intelligence based on artificial neural networks.

- The computer predicts whether the received image is of a cat. Each input (IN) receives a numerical value according to the color of an associated pixel in the image. The inputs are connected to two outputs (OUT) through connections (called weights Wij), each with a numerical value representing the strength of the connection between an input-output pair. In this example, output 1 is the probability that the image is a cat and output 2 is the probability that the image is a dog. Each output receives a value that depends on the weighted sum of all the inputs, that is, the sum of each input multiplied by its weight. The calculation shows a 0.7 probability that the image is a cat and a 0.21 probability that it is a dog, so the prediction is that the image contains a cat. Learning is the process of updating the connection values. In complex AI systems, there are millions of weights and many thousands of inputs and outputs, connected in various ways.
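The calculation in Figure 2 can be sketched as follows. The pixel values and weights below are hypothetical (they are not the figure's numbers), and the sigmoid function used to turn each weighted sum into a value between 0 and 1 is an assumption; the figure only says that each output depends on the weighted sum of the inputs.

```python
import math

def forward(inputs, weights):
    """weights[i][j] is W_ij, the connection from input i to output j."""
    n_outputs = len(weights[0])
    outputs = []
    for j in range(n_outputs):
        # Weighted sum: each input multiplied by its weight, then added up.
        total = sum(inputs[i] * weights[i][j] for i in range(len(inputs)))
        outputs.append(1.0 / (1.0 + math.exp(-total)))  # squash into (0, 1)
    return outputs

labels = ["cat", "dog"]
pixels = [0.9, 0.2, 0.6]      # hypothetical pixel values (IN)
W = [[1.5, -0.5],             # hypothetical weights W_ij
     [-1.0, 1.0],
     [0.8, -0.3]]
out = forward(pixels, W)
prediction = labels[out.index(max(out))]
print(prediction)  # cat
```

Because each output is squashed separately, the two values need not add up to 1, just as 0.7 and 0.21 in the figure do not. Every output requires one multiplication and one addition per input, so a network with millions of weights needs millions of such operations for every image.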

We can think of a computer’s memory as a warehouse or a library: the bigger it is, the more time and energy it takes to reach a specific location. Because AI applications need an extremely large memory, accessing information is slow and consumes a lot of energy. In addition, predicting results and updating the parameters during training require a huge number of arithmetic operations, on the order of a billion additions and multiplications to recognize a single image. A standard computer’s processor performs arithmetic operations one after the other, and between them the information must be moved between the processor and the memory. Since AI applications must perform billions of calculations on millions of different numbers, standard computers are very inefficient: they waste huge amounts of time and energy transferring information, in addition to the time spent performing many computations one after the other.
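A short calculation shows where the "billion operations per image" scale can come from. This sketch assumes a hypothetical network of fully connected layers (every input connected to every output, as in Figure 2): a 224 × 224 color image with 3 values per pixel, one hidden layer of 4096 units, and 1000 output categories. It also assumes each weight is stored in 4 bytes and must be read once per image.

```python
def cost_per_image(layer_sizes, bytes_per_weight=4):
    """Count multiply-add operations and weight bytes for a stack of
    fully connected layers, where every input connects to every output."""
    macs = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        macs += n_in * n_out  # one multiplication and one addition per weight
    # Each weight is used once per image, so all of them must be read.
    weight_bytes = macs * bytes_per_weight
    return macs, weight_bytes

# Hypothetical layer sizes: image values -> hidden units -> categories.
macs, weight_bytes = cost_per_image([224 * 224 * 3, 4096, 1000])
print(f"{macs:,} multiply-adds, {weight_bytes / 1e9:.2f} GB of weights")
# 620,658,688 multiply-adds, 2.48 GB of weights
```

Under these assumptions, recognizing one image takes over a billion arithmetic operations, and a von Neumann computer must move about 2.5 GB of weights from memory to the processor to do it, far more than fits in a small, fast memory.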

The Need for New Computers

The huge success of AI is driving computer companies to build bigger and more powerful computers that can handle the huge amounts of data that effective AI requires. To do this, graphical processing units (GPUs) are being used alongside standard processors. GPUs were originally developed to help computers perform calculations on images, which in most cases means performing the same operation many times, such as changing the value of every pixel in an image. In AI, GPUs are used to perform a large number (usually hundreds or thousands) of additions and multiplications in parallel (at the same time), reducing the computation time compared to a standard processor that performs operations one at a time. Many companies have also started producing dedicated AI processors, which contain many electronic circuits that perform additions and multiplications in parallel. In addition, instead of one very big memory, systems are being built with memories of different sizes in different locations, so that data used many times is kept in a small, fast memory, and data used infrequently is kept in a big, slower memory.
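The idea of keeping frequently used data in a small, fast memory can be illustrated with a simple cache simulation. This is a minimal sketch: the capacity of 4 values, the access costs (1 unit for the fast memory, 100 for the slow one), and the least-recently-used replacement policy are all assumptions chosen for illustration, not measurements of any real system.

```python
from collections import OrderedDict

class TwoLevelMemory:
    """A small fast memory in front of a large slow one.

    Only the cost of each read is tracked; the stored values themselves
    are not modeled.
    """
    def __init__(self, fast_capacity, fast_cost=1, slow_cost=100):
        self.fast = OrderedDict()  # addresses in fast memory, oldest use first
        self.fast_capacity = fast_capacity
        self.fast_cost = fast_cost
        self.slow_cost = slow_cost
        self.total_cost = 0

    def read(self, address):
        if address in self.fast:               # already in fast memory
            self.fast.move_to_end(address)     # mark as most recently used
            self.total_cost += self.fast_cost
        else:                                  # must fetch from slow memory
            self.total_cost += self.slow_cost
            self.fast[address] = True
            if len(self.fast) > self.fast_capacity:
                self.fast.popitem(last=False)  # evict least recently used

reused = TwoLevelMemory(fast_capacity=4)
for _ in range(100):
    for address in range(4):   # the same 4 values, read over and over
        reused.read(address)

streamed = TwoLevelMemory(fast_capacity=4)
for address in range(400):     # 400 different values, each read once
    streamed.read(address)

print(reused.total_cost, streamed.total_cost)  # 796 40000
```

Both cases perform 400 reads, but only the first benefits from the small memory. If the weights of an AI model behave more like the second case (many different values, each used once per image), the small memory helps little, which is the difficulty described in the next paragraph.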

Despite these attempts to adapt classical computers to the age of AI, the way AI applications compute is fundamentally different from the way a von Neumann computer works. Although memories of different sizes are now used, it is difficult to take advantage of the efficiency of the small memories, so the big, slow memories are still accessed often. The new AI processors have greatly increased the number of computations performed in a given time, but huge amounts of data must still be moved between the memory and the processor, so a lot of energy and time is still wasted.

Neuromorphic Computers: Computers That Work Like the Brain

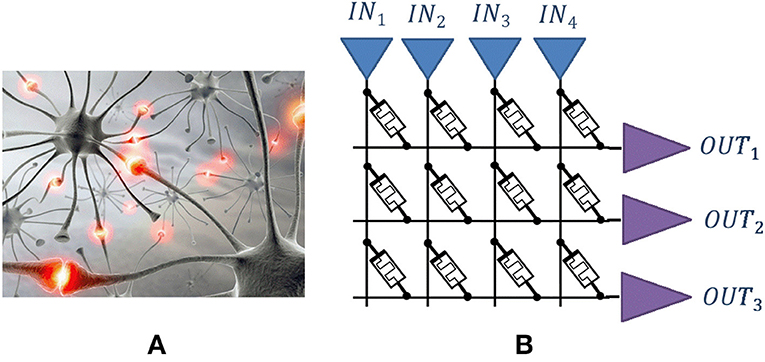

The tasks performed by AI (driving, identification, etc.) are performed in a completely different way by the human brain, and with amazing efficiency. To perform a task that requires thousands of AI processors connected together, the human brain uses less than one tenth of the energy consumed by a single processor. We should therefore try to learn from the brain and build computational models and computers that operate in a similar way. In the brain, there is no separation between data storage and processing. All information, expressed through electrical signals and chemical changes, is transferred between brain cells called neurons, and this transfer happens simultaneously among billions of connected neurons. Neurons are linked by connections called synapses, which can be strengthened or weakened. Learning is essentially a change in the strength of the connections between neurons. Synapses are very efficient: unlike in current AI systems, there is no separation between calculating the change in a connection’s strength and storing that strength (Figure 3).

- Figure 3 - (A) Living neurons are connected through synapses.

- (B) In a neuromorphic circuit, the neurons are connected to one another through an array of electronic components with variable electrical conductivity. The conductivity value represents the strength of the connection between neurons and can be changed and adapted during learning. The input signals (IN) are converted into continuous (analog) electrical signals, which are collected at each output and converted into digital signals. In this example, both storage and computation are performed by the same circuit, and not in separate units as in the von Neumann architecture.

To match the world of computing to the needs of AI, we must build computers with a structure inspired by the brain. These computers should have no separation between storage and computation, but instead use units capable of calculating and saving the values simultaneously. Electrical circuits inspired by the brain are called neuromorphic circuits, because they have the shape (morphic) of a neuron (neuro) [2]. In neuromorphic circuits, the same electronic components are used to both perform a calculation and to store the result locally. The structure of such a computer is completely different from a von Neumann machine, and it looks like a neural network in the brain.
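The array in Figure 3B can be sketched as an idealized crossbar. Two physical laws do the arithmetic: by Ohm's law, a device with conductance G[i][j] driven by voltage V_i passes current V_i × G[i][j], and by Kirchhoff's current law the currents flowing into output j add up, giving I_j = Σ_i V_i × G[i][j], the same weighted sum as in Figure 2. Everything else here is an assumption for illustration: the conductance values, the device range [0, g_max], and the simple update rule (change each conductance in proportion to its input times the output's error). Noise, wire resistance, and the analog/digital converters are ignored, and this is not the design used in any of the cited systems [3–5].

```python
class Crossbar:
    """An idealized array of adjustable conductances G[i][j]."""

    def __init__(self, conductances, g_max=1.0):
        self.G = [row[:] for row in conductances]  # the stored weights
        self.g_max = g_max                          # assumed device limit

    def read(self, voltages):
        # Compute: I_j = sum_i V_i * G[i][j] (Ohm's law + Kirchhoff's law).
        # The devices that store the weights perform the multiply-and-add.
        n_cols = len(self.G[0])
        return [sum(v * row[j] for v, row in zip(voltages, self.G))
                for j in range(n_cols)]

    def update(self, voltages, errors, rate):
        # Learn: each device is changed in place; nothing is copied to a
        # separate memory. Physical conductances cannot be negative, so
        # values are clipped to the assumed range [0, g_max].
        for i, v in enumerate(voltages):
            for j, e in enumerate(errors):
                g = self.G[i][j] + rate * v * e
                self.G[i][j] = min(max(g, 0.0), self.g_max)

xbar = Crossbar([[0.2, 0.5],
                 [0.4, 0.1]])
inputs = [1.0, 0.5]
before = [round(c, 3) for c in xbar.read(inputs)]
xbar.update(inputs, errors=[0.1, -0.1], rate=1.0)
after = [round(c, 3) for c in xbar.read(inputs)]
print(before, after)  # [0.4, 0.55] [0.525, 0.425]
```

The same object both stores the connection strengths and computes with them, and learning raises the first output and lowers the second without any data leaving the array, which is the property that distinguishes a neuromorphic circuit from the von Neumann design.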

Recently, several computers using neuromorphic circuits have been developed [3–5], but there are still many challenges before these new computers can replace existing ones. Neuromorphic computers require new materials and devices that can combine calculation and memory, since the devices in standard computers are used only for a single purpose. Additionally, neuromorphic computers need new programs capable of giving the right instructions to the special structure. As von Neumann computer systems are increasingly combined with neuromorphic computers, AI applications will become more efficient, and computers will continue advancing toward human capabilities.

Glossary

Processor: An electronic unit that performs mathematical operations on data inside the computer.

Memory: A device used to store information for immediate use in a computer.

Von Neumann Architecture: The computer structure common today, which consists of a processor and a storage (memory) unit. The processor controls the system and performs logic and arithmetic operations. The memory stores the program and the data the program reads or writes.

Artificial Intelligence (AI): “The ability to make a machine behave in a way that would be considered intelligent if a person would behave that way” (Marvin Minsky).

Graphical Processing Unit: A graphical processing unit (GPU) is a special-purpose processor, originally designed with a specialized structure to accelerate the creation of images for display devices. GPUs are now also widely used to accelerate other applications, such as AI.

Neuron: A neuron, or nerve cell, is an electrically excitable cell that communicates with other cells via specialized connections called synapses. It is the main component of nervous tissue in animals.

Synapse: A structure in the nervous system that permits a neuron (nerve cell) to pass an electrical or chemical signal to another neuron or to a target effector cell.

Neuromorphic Circuit: Electronic circuits that mimic the behavior of neurons in the human body. Neuromorphic circuits can be combined to create neuromorphic computers.

Conflict of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

[1] von Neumann, J. 1993. First draft of a report on the EDVAC. IEEE Ann. Hist. Comput. 15:27–75. doi: 10.1109/85.238389

[2] Mead, C. 1990. Neuromorphic electronic systems. Proc. IEEE 78:1629–36. doi: 10.1109/5.58356

[3] Davies, M., Srinivasa, N., Lin, T. H., Chinya, G., Cao, Y., Choday, S. H., et al. 2018. Loihi: a neuromorphic manycore processor with on-chip learning. IEEE Micro 38:82–99. doi: 10.1109/MM.2018.112130359

[4] Soudry, D., Di Castro, D., Gal, A., Kolodny, A., and Kvatinsky, S. 2015. Memristor-based multilayer neural networks with online gradient descent training. IEEE Trans. Neural Netw. Learn. Syst. 26:2408–21. doi: 10.1109/TNNLS.2014.2383395

[5] Prezioso, M., Merrikh-Bayat, F., Hoskins, B. D., Adam, G. C., Likharev, K. K., and Strukov, D. B. 2015. Training and operation of an integrated neuromorphic network based on metal-oxide memristors. Nature 521:61–4. doi: 10.1038/nature14441