For decades, it has been believed that the brain is organized into "sensory areas": that is, there is a "visual area," an "auditory area," and so forth, and the visual area can only process visual information. It has also been believed that if people do not have an opportunity to see during their first few years of life (for example, because a rare condition caused them to be born blind), the "visual area" loses its ability to process visual information.

Here, we briefly describe a few studies that challenge this theory. Using a special method called "sensory substitution," researchers have been able to demonstrate that blind people can "see" using sounds. Using a brain scanner, they showed that the very brain areas thought to have lost their ability to process visual information play a central role in the ability to "see using sound" and to identify objects, faces, letters, and more [1].

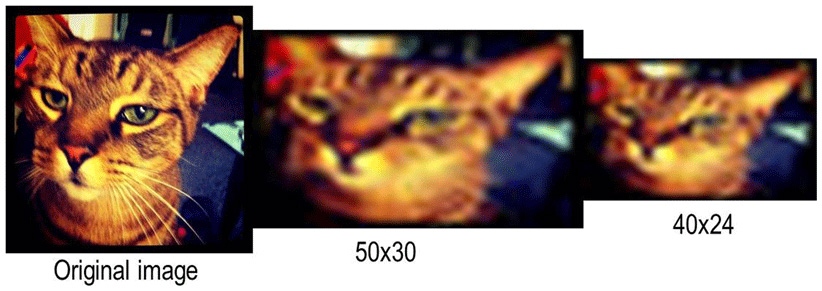

Sensory substitution is the use of one sense (for example, hearing or touch) to deliver information that is usually delivered through another sense (for example, vision). For instance, a picture, which we usually see with our eyes, can be "seen" using sounds. The EyeMusic is one example of a sensory substitution device (SSD) [2]. It takes in a picture (e.g., from your Instagram account) and translates it into sound. A pixel that appears low in the picture is played as a low-pitched musical note, and a pixel that appears high in the picture is played as a high-pitched note. Each color is played by a different musical instrument. The device currently represents six colors: white (choir), blue (trumpet), red (Rapman's reed), green (reggae organ), yellow (strings), and black (represented by silence). This musical "image" is played column by column, from left to right, so an object on the left of the image is heard before an object on the right. The resolution can be set to 40 × 24 pixels or 50 × 30 pixels (see Figure 1).
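To make the translation rules above concrete, here is a minimal Python sketch of an EyeMusic-style conversion. It is only an illustration, not the device's actual software: the picture is assumed to be a grid of color names, and the timing constant `COLUMN_DURATION_S`, the starting note `LOWEST_NOTE`, and the simple "one row up = one note up" pitch rule are hypothetical choices made for this example. The color-to-instrument pairs are the ones listed in the text.

```python
from typing import List, Tuple

# Color-to-instrument pairs as listed in the text; black is silence (None).
INSTRUMENTS = {
    "white": "choir",
    "blue": "trumpet",
    "red": "Rapman's reed",
    "green": "reggae organ",
    "yellow": "strings",
    "black": None,
}

# Hypothetical parameters, chosen only for this illustration.
COLUMN_DURATION_S = 0.05  # seconds spent on each column
LOWEST_NOTE = 48          # note number given to the bottom row (assumed)

Event = Tuple[float, int, str]  # (start time in seconds, note number, instrument)


def sonify(image: List[List[str]]) -> List[Event]:
    """Turn a grid of color names into note events, scanning left to right.

    image[0] is the top row of the picture; all rows must have equal length.
    """
    n_rows, n_cols = len(image), len(image[0])
    events: List[Event] = []
    for col in range(n_cols):                  # columns are played left to right
        start = col * COLUMN_DURATION_S
        for row in range(n_rows):
            instrument = INSTRUMENTS[image[row][col]]
            if instrument is None:             # black pixels make no sound
                continue
            height = n_rows - 1 - row          # 0 = bottom row of the picture
            note = LOWEST_NOTE + height        # higher pixel -> higher note
            events.append((start, note, instrument))
    return events


# A tiny 2 x 2 "picture": a blue pixel at bottom-left, a red pixel at top-right.
picture = [
    ["black", "red"],
    ["blue", "black"],
]
for event in sonify(picture):
    print(event)
# (0.0, 48, 'trumpet')
# (0.05, 49, "Rapman's reed")
```

Notice that the output is just a list of timed notes: nothing in it says "apple" or "cat." Recognizing the object is left entirely to the listener.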

- Figure 1 - The EyeMusic resolution.

- On the left is an image of a cat, taken from Instagram. In the middle is the same image at a resolution of 50 × 30 pixels (the highest resolution currently offered by the EyeMusic), and on the right is the same image at a resolution of 40 × 24 pixels (the lower resolution used in the reported EyeMusic studies).
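Going from a full photo to 50 × 30 or 40 × 24 pixels means many original pixels have to be merged into each new one. The text does not say how the EyeMusic does this, so the Python sketch below simply assumes one common approach, block averaging of a grayscale image, where each output pixel is the average brightness of a rectangular block of input pixels. The function name `downsample` and the requirement that the sizes divide evenly are our own simplifications.

```python
from typing import List


def downsample(image: List[List[float]], out_w: int, out_h: int) -> List[List[float]]:
    """Shrink a grayscale image by averaging equal-sized blocks of pixels.

    Assumes the input height and width are exact multiples of out_h and out_w.
    """
    in_h, in_w = len(image), len(image[0])
    if in_h % out_h or in_w % out_w:
        raise ValueError("input size must be a multiple of the output size")
    bh, bw = in_h // out_h, in_w // out_w  # size of the block behind each new pixel
    result = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            block = [image[y][x]
                     for y in range(r * bh, (r + 1) * bh)
                     for x in range(c * bw, (c + 1) * bw)]
            row.append(sum(block) / len(block))  # average brightness of the block
        result.append(row)
    return result


# A made-up 200 x 120 image can be reduced to either EyeMusic resolution.
big = [[1.0] * 200 for _ in range(120)]
print(len(downsample(big, 50, 30)[0]), len(downsample(big, 50, 30)))  # 50 30
print(len(downsample(big, 40, 24)[0]), len(downsample(big, 40, 24)))  # 40 24
```

At 40 × 24, each new pixel stands in for a 5 × 5 block of the original, which is why fine details such as whiskers disappear in Figure 1.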

It is important to note that if you feed a picture of your cat into the EyeMusic, the device will not say the word "cat" or play a purring sound. Rather, it will produce a combination of sounds from which the user can infer that this is a picture of a cat.

Our team of researchers at Prof. Amir Amedi's lab at the Hebrew University has been using this device as a tool to study brain function [3]. In one cool study, we used a related device called the vOICe. People who are legally blind were able to "see" so well with the device that they crossed the legal-blindness threshold. In other words, if they hypothetically used the device while taking the test that determines whether a person is legally blind, they would no longer qualify as legally blind.

After a training program, users of the device are able to identify objects and facial expressions and to read words (for a demonstration, see this video).

Our team also uses functional magnetic resonance imaging (fMRI). With fMRI, we can take a picture of what goes on inside the brain while study participants perform certain tasks. Different parts of the brain are active when we see a face or read a word, and the images from the fMRI scanner show which areas are active at the moment the picture (or scan) is taken.

In one study, users of the vOICe "saw" images using sound and then told the researchers whether they thought each image was a face, a body part, a letter, a texture, a house, or an everyday object. Meanwhile, their brains were scanned using fMRI. The results were stunning: in blind individuals, the "visual" brain area was activated by the sounds. In other words, the "visual" area was not only "visual"! Moreover, the pattern of brain activity in this "visual" area was very similar to the pattern observed in the brains of sighted individuals. For example, there is a small area that is in charge of identifying words. Until recently, it was thought that only letters seen with the eyes could activate it. When blind participants read words with the SSD, this area was also activated. In fact, it was activated even in people who were born blind and have therefore never seen a written word [4].
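What does it mean for two brain activity patterns to be "very similar"? The text does not say how this was measured, so the sketch below only shows one common way to put a number on it: the Pearson correlation between two lists of activity values, one value per brain location. The function name `pattern_similarity` is hypothetical, and no real study data are used here.

```python
from math import sqrt
from typing import Sequence


def pattern_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation between two activity patterns of equal length.

    +1: the patterns rise and fall together across locations.
     0: no linear relationship.
    -1: one pattern is high wherever the other is low.
    """
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("patterns must have the same length (at least 2)")
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]  # how far each value is from its pattern's mean
    db = [y - mean_b for y in b]
    num = sum(x * y for x, y in zip(da, db))
    den = sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if den == 0:
        raise ValueError("a pattern with no variation has no defined correlation")
    return num / den
```

A value close to +1 would mean that the locations most active in one group are also the most active in the other, even if the overall strength of the signal differs between the two groups.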

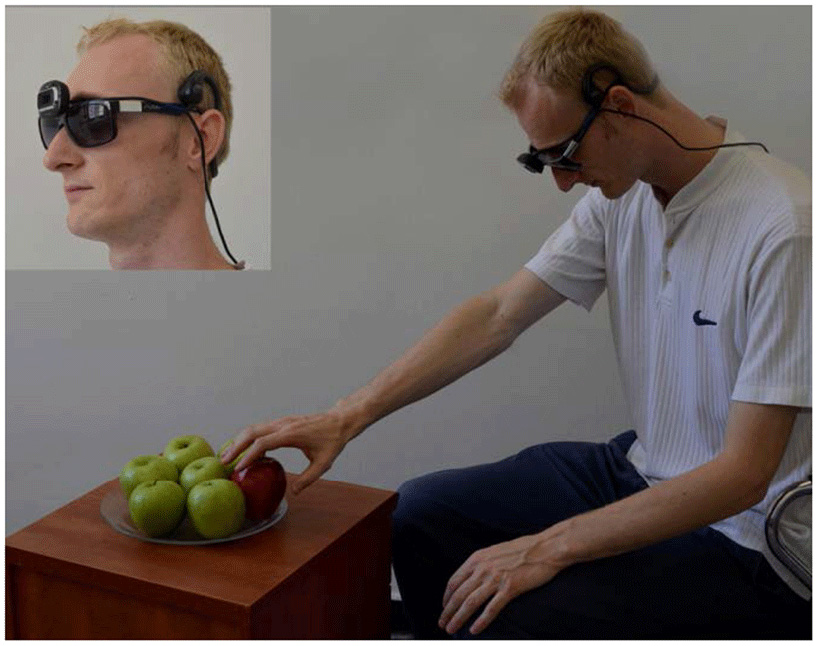

In another study, sighted participants moved their hands to targets that were either seen on a computer screen or heard via the EyeMusic. It turns out that in both cases, the movements were highly accurate: on average, they were off by less than 0.5 cm (about 0.2 in). This was after very little training (as little as 25 min of experience with the EyeMusic). We anticipate that with further training, participants would do even better. In fact, one blind long-term user of the EyeMusic was able to find a red apple in a pile of green apples and pick it out in a swift and accurate movement (see an illustration in Figure 2).
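An "average error" like the 0.5 cm above can be computed in several ways, and the text does not say which one the study used. The sketch below assumes the simplest one: the straight-line distance between each target and the point where the hand ended up, averaged over all trials. The function names and the trial positions are invented for illustration only.

```python
from math import hypot
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) position on the screen, in cm
CM_PER_INCH = 2.54


def mean_reach_error_cm(targets: List[Point], endpoints: List[Point]) -> float:
    """Average straight-line distance between each target and where the hand landed."""
    if len(targets) != len(endpoints) or not targets:
        raise ValueError("need one endpoint per target, and at least one trial")
    distances = [hypot(tx - ex, ty - ey)
                 for (tx, ty), (ex, ey) in zip(targets, endpoints)]
    return sum(distances) / len(distances)


def cm_to_inches(cm: float) -> float:
    return cm / CM_PER_INCH


# Two invented trials: one reach lands 0.5 cm below its target, one lands exactly on it.
targets = [(0.0, 0.0), (10.0, 5.0)]
endpoints = [(0.0, -0.5), (10.0, 5.0)]
print(mean_reach_error_cm(targets, endpoints))  # 0.25
print(round(cm_to_inches(0.5), 2))              # 0.2
```

The last line also shows where the "about 0.2 in" comes from: 0.5 cm divided by 2.54 cm per inch is roughly 0.197 in.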

- Figure 2 - An illustration of the EyeMusic sensory substitution device (SSD).

- The user wears glasses with a camera attached to them and hears the images captured by the camera through bone-conduction headphones. Inset: close-up of the glasses-mounted camera and headphones.

In summary, sensory substitution gives researchers a useful tool to study the brain, while showing promise as a means to help blind individuals perceive and interact with their environment.

References

[1] Levy-Tzedek, S., Novick, I., Arbel, R., Abboud, S., Maidenbaum, S., Vaadia, E., et al. 2012. Cross-sensory transfer of sensory-motor information: visuomotor learning affects performance on an audiomotor task, using sensory-substitution. Sci. Rep. 2:949. doi: 10.1038/srep00949

[2] Levy-Tzedek, S., Hanassy, S., Abboud, S., Maidenbaum, S., and Amedi, A. 2012. Fast, accurate reaching movements with a visual-to-auditory sensory-substitution ‘device’. Restor. Neurol. Neurosci. 30:313–23. doi: 10.3233/RNN-2012-110219

[3] Striem-Amit, E., Guendelman, M., and Amedi, A. 2012. ‘Visual’ acuity of the congenitally blind using visual-to-auditory sensory substitution. PLoS ONE 7:e33136. doi: 10.1371/journal.pone.0033136

[4] Reich, L., Szwed, M., Cohen, L., and Amedi, A. 2011. A ventral visual stream reading center independent of visual experience. Curr. Biol. 21:363–8. doi: 10.1016/j.cub.2011.01.040