Abstract

Have you ever wondered how, with just two ears, we are able to locate sounds coming from all around us? Or why, when you are playing a video game, an explosion can seem to come from right behind you, even though you are safe in your own home? Our minds determine where a sound is coming from using multiple cues. Two of these cues are (1) which ear the sound hits first, and (2) how loud the sound is when it reaches each ear. For example, if a sound hits your right ear first, it likely originated to the right of your body. If it hits both ears at the same time, it likely originated from directly in front of you or directly behind you. Creators of movies and video games use these cues to trick our minds, giving us the illusion that certain sounds are coming from specific directions. In this article, we will explore how your brain gathers information from your ears and uses that information to determine where a sound is coming from.

The Physical Elements of Sound

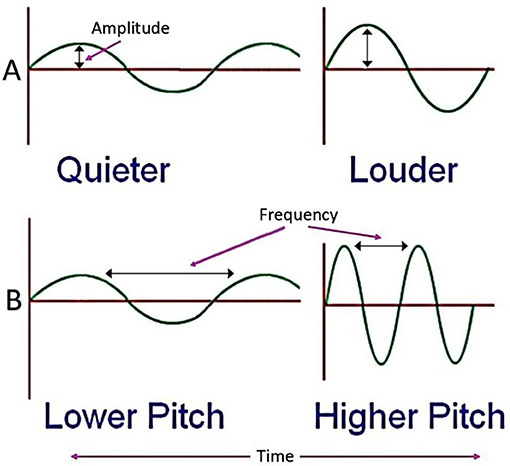

Our ability to hear is crucial for providing information about the world around us. Sound is produced when an object vibrates the air around it, and this vibration can be represented as a wave that travels through space. For example, when a branch falls off a tree and hits the ground, the collision makes the air around it vibrate, and those vibrations travel outward as a sound. One thing that many people do not realize is that sound waves have physical properties and are therefore influenced by the environment in which they occur. In the vacuum of space, for instance, sound cannot travel at all, because a true vacuum contains no air or other material to vibrate and carry a sound wave.

The two most important physical qualities of sound are frequency and amplitude. Frequency is how fast a sound wave vibrates, measured as the number of vibrations per second (in hertz), and it determines the pitch of a sound. Higher-frequency sounds have a higher pitch, like a flute or a bird chirping, while lower-frequency sounds have a lower pitch, like a tuba or a large dog barking. The amplitude of a sound wave can be thought of as the strength of the vibrations as they travel through the air, and it determines the perceived loudness of the sound. As you can see in Figure 1, when the peak of the sound wave is smaller, the sound will be perceived as quieter; when the peak is larger, the sound will seem louder. It might help to think of sound waves like waves in the ocean. If you stand in still water and drop a pebble near your legs, it will cause a small ripple (a tiny wave) that does not affect you much. But if you stand in the ocean during stormy weather, the large incoming waves may be strong enough to knock you down! Just like the size and strength of water waves, the size and strength of sound waves can have a big effect on what you hear.

- Figure 1 - Amplitude and frequency represented as waves.

- (A) Amplitude is the strength of the vibrations as they travel through the air; the greater the amplitude, the louder the sound seems to the listener. (B) Frequency is how fast a sound wave vibrates (the number of vibrations per second), which determines the perceived pitch of the sound; the greater the frequency, the higher the pitch.
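The two properties can be made concrete with a few lines of Python. The sketch below is only an illustration, assuming an idealized pure tone (a single sine wave); real sounds such as a flute or a dog's bark mix many frequencies at once. The helper names `sine_wave` and `count_cycles` and the rate of 8,000 samples per second are hypothetical choices made for this example.

```python
import math

def sine_wave(amplitude, frequency_hz, duration_s, sample_rate=8000):
    """Return a pure tone as a list of air-pressure samples.

    amplitude sets how big the peaks are (perceived loudness);
    frequency_hz sets how many vibrations happen per second (perceived pitch).
    """
    n_samples = int(duration_s * sample_rate)
    return [
        amplitude * math.sin(2 * math.pi * frequency_hz * n / sample_rate)
        for n in range(n_samples)
    ]

def count_cycles(samples):
    """Count upward zero crossings, roughly one per full vibration."""
    return sum(1 for a, b in zip(samples, samples[1:]) if a < 0 <= b)

quiet = sine_wave(0.1, 440, 1.0)   # small peaks: a quiet tone
loud = sine_wave(0.9, 440, 1.0)    # same pitch, bigger peaks: a louder tone
high = sine_wave(0.5, 880, 1.0)    # twice the frequency: a higher pitch

print("peak of quiet tone:", max(abs(s) for s in quiet))
print("peak of loud tone:", max(abs(s) for s in loud))
print("vibrations in one second:", count_cycles(quiet), "vs", count_cycles(high))
```

Doubling the frequency roughly doubles the number of vibrations counted in one second, which we hear as the same note one octave higher, while changing the amplitude only changes the size of the peaks.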

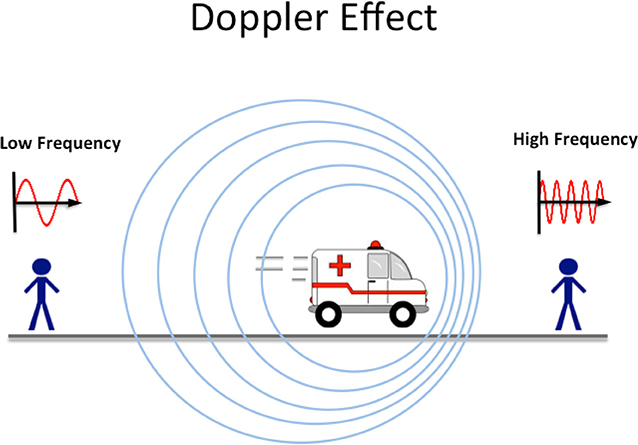

Sound waves interact in fascinating ways with the environment around us. Have you ever noticed how an ambulance’s siren sounds different while the ambulance is approaching you than after it has passed? This happens because sound takes time to travel from one point to another, and the movement of the sound source changes the frequency of the waves by the time they reach the person hearing it. While the ambulance drives toward you, each new sound wave is sent out from a little closer than the last, so the waves bunch together and reach your ears at a higher frequency, making the siren sound higher-pitched. Once the ambulance passes and drives away, the waves are stretched apart, so their frequency, and the pitch you hear, drops. This phenomenon is known as the Doppler effect (see Figure 2).

- Figure 2 - How sound wave frequencies are affected (and perceived) as a siren approaches or travels away from an individual.

- As the ambulance approaches an individual, the frequency of the sound reaching them increases and is therefore perceived as having a higher pitch. As the ambulance drives away from the individual, the frequency decreases, causing the sound to be perceived as having a lower pitch.
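The Doppler effect can be written as a simple formula. The sketch below assumes the listener is standing still, the ambulance drives straight toward or away from them at a steady speed, and sound travels at about 343 meters per second (its speed in air at roughly 20 °C). The 700 Hz siren tone and the 25 m/s (90 km/h) speed are made-up example values, and `observed_frequency` is a hypothetical helper name.

```python
SPEED_OF_SOUND = 343.0  # meters per second in air at about 20 °C

def observed_frequency(source_hz, source_speed, approaching):
    """Frequency heard by a listener standing still near a moving sound source.

    While the source moves toward the listener, the waves are squeezed together
    (higher frequency); while it moves away, they are stretched (lower frequency).
    """
    if approaching:
        return source_hz * SPEED_OF_SOUND / (SPEED_OF_SOUND - source_speed)
    return source_hz * SPEED_OF_SOUND / (SPEED_OF_SOUND + source_speed)

siren_hz = 700.0        # hypothetical siren tone
ambulance_speed = 25.0  # m/s, about 90 km/h

up = observed_frequency(siren_hz, ambulance_speed, approaching=True)
down = observed_frequency(siren_hz, ambulance_speed, approaching=False)
print(round(up), round(down))  # prints 755 652
```

The formula depends on the ambulance's speed and direction, not on how far away it is: in this simple straight-line case, the pitch stays raised the whole time the ambulance is coming toward you and drops as it passes.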

However, sound is affected not only by distance but also by objects in its path. Think back to a time when someone was calling for you from another room. You probably noticed that it was harder to hear them than when they were right next to you. Distance is not the only reason a person is harder to hear from another room. The person is also harder to hear because objects in the environment, such as walls and furniture, absorb some of the sound waves; the farther away the person calling you is, the more objects there tend to be between the two of you, so less of the sound reaches your ears. As a result, the voice may sound quiet and muffled, even when the person is yelling loudly.
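The effect of distance alone can be estimated with the inverse-square law. The sketch below assumes a small sound source in open air with nothing in the way, so it deliberately leaves out the absorption by objects in between that the paragraph above describes. Loudness differences are expressed in decibels (dB), and `level_drop_db` is a hypothetical helper name.

```python
import math

def level_drop_db(near_m, far_m):
    """How many decibels quieter a sound is at far_m than at near_m.

    Assumes a point source in open air: the sound energy spreads over a
    sphere, so intensity falls with the square of the distance, and the
    level in decibels falls by 20 * log10(distance ratio).
    """
    return 20 * math.log10(far_m / near_m)

# Someone calling from 2 m away versus 8 m away (open air, no obstacles):
print(f"{level_drop_db(2, 8):.1f} dB quieter")  # prints 12.0 dB quieter
```

Each doubling of distance costs about 6 dB; walls, doors, and furniture then remove additional energy on top of that, which is why a voice from another room can sound much quieter than distance alone would predict.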

Structure of the Ear

Our ears are complex anatomical structures that are separated into three main parts, called the outer ear, middle ear, and inner ear. The outer ear is the only visible part of the ear and is primarily used for funneling sound from the environment into the ear canal. From there, sound travels into the middle ear, where it vibrates the eardrum and three tiny bones, called the ossicles, that pass the sound energy on to the inner ear. In the inner ear, the energy is received by the cochlea, a structure shaped like a snail shell. The cochlea contains the organ of Corti, which holds sensory “hair cells” that detect the sound energy. The cochlea amplifies the vibrations picked up by these hair cells, and the hair cells turn them into electrical signals that travel through the auditory nerve to the brain.

Sound and the Brain

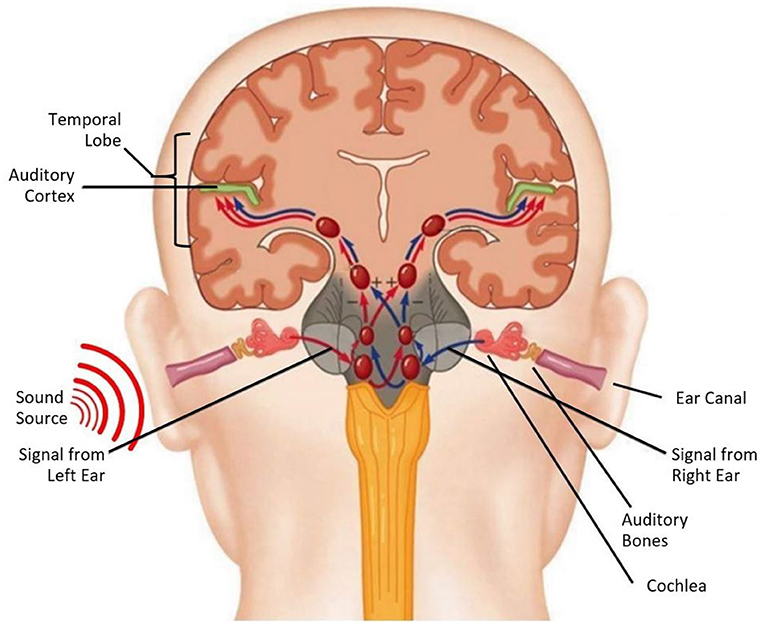

While the ears are responsible for receiving sound from the environment, it is the brain that perceives and makes sense of these sounds. The auditory cortex of the brain is located within a region called the temporal lobe and is specialized for processing and interpreting sounds (see Figure 3). The auditory cortex allows humans to process and understand speech, as well as other sounds in the environment. What would happen if signals from the auditory nerve never reached the auditory cortex? When a person’s auditory cortex is damaged due to a brain injury, the person sometimes becomes unable to make sense of sounds; for instance, they may not understand the meaning of spoken words, or they may be unable to tell two different musical instruments apart. Because many other areas of the brain are also active during the perception of sound, people with damage to the auditory cortex can often still react to sound. In these cases, even though the brain still registers the sound, it is unable to extract meaning from it.

- Figure 3 - Diagram of sound traveling from a source through the ear canal and turning into neural signals that reach the auditory cortex.

- The sound is directed into the ear canal by the outer ear, and is later turned into neural signals by the cochlea. This signal is then transmitted to the auditory cortex, where meaning is assigned to the sound.

Hearing Sound from Over Here, or Over There?

One important function of the ears of humans and other animals is to funnel sounds from the environment into the ear canal. Though the outer ear funnels sound into the ear, it does so most efficiently when sound comes from the side of the head (rather than from directly in front of or behind it). When hearing a sound from an unknown source, humans typically turn their heads to point an ear toward where the sound might be coming from. People often do this without even realizing it; for example, when you are in a car and hear an ambulance, you may move your head around to try to locate where the siren is coming from. Some animals, like dogs, are more efficient at locating sound than humans are. Some animals (such as some dogs and many cats) can even physically move their ears in the direction of the sound!

Humans use two important cues to help determine where a sound is coming from: (1) which ear the sound hits first (known as interaural time differences), and (2) how loud the sound is when it reaches each ear (known as interaural intensity differences). If a dog were to bark on the right side of your body, you would have no problem turning and looking in that direction. This is because the sound waves produced by the barking hit your right ear slightly before they hit your left ear, and they are also louder in your right ear. Why is the sound louder in your right ear when it comes from the right? Just as objects in your house block or absorb the sound of someone calling you, your own head is a solid object that blocks sound waves traveling toward you. When sound comes from the right side, your head blocks some of the sound waves before they reach your left ear. As a result, the sound is louder in your right ear, signaling that it came from the right.
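How large is the interaural time difference? One classic textbook approximation, Woodworth's spherical-head model, treats the head as a sphere and estimates the extra distance the sound must travel around it to reach the far ear. The sketch below assumes a head radius of 8.75 cm and a speed of sound of 343 m/s; both are typical values rather than measurements, and the function names are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # meters per second in air at about 20 °C
HEAD_RADIUS = 0.0875    # meters; a typical adult value (an assumption)

def interaural_time_difference(azimuth_deg):
    """Woodworth's spherical-head estimate of the interaural time difference.

    azimuth_deg: 0 = straight ahead, +90 = directly right, -90 = directly left.
    Returns seconds; a positive value means the right ear hears the sound first.
    """
    if not -90 <= azimuth_deg <= 90:
        raise ValueError("this simple model only covers -90 to +90 degrees")
    theta = math.radians(azimuth_deg)
    return HEAD_RADIUS / SPEED_OF_SOUND * (theta + math.sin(theta))

def which_ear_first(itd_seconds):
    """Turn the sign of the time difference into a direction."""
    if itd_seconds > 0:
        return "right"
    if itd_seconds < 0:
        return "left"
    return "both at once (straight ahead or behind)"

for angle in (0, 30, 90, -45):
    itd = interaural_time_difference(angle)
    print(f"{angle:>4} degrees: {itd * 1e6:6.0f} microseconds, {which_ear_first(itd)}")
```

Even for a sound directly to one side, this model gives a difference of only about two-thirds of a millisecond. A sound straight ahead and one straight behind both give a difference of zero, which is one reason front and back are easier to confuse than left and right.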

You can explore this through a fun activity. Close your eyes and ask a parent or friend to jingle a set of keys somewhere around your head. Do this several times, and each time, try to point to the location of the keys, then open your eyes and see how accurate you were. Chances are, this is easy for you. Now cover one ear and try it again. With only one ear available, you may find that the task is harder, or that you are less precise in pointing to the right location. This is because, with one ear muffled, you can no longer compare the timing and intensity of the sound reaching each ear as well as before.

Immersive Audio in Games and Movies

When audio engineers create three-dimensional audio (3D audio), they must take into consideration all the cues that help us locate sound, and they must use these cues to trick us into perceiving sound as coming from a particular location. With 3D audio, the sound comes from only a limited number of physical sources (headphones, for example, have only two), yet it can seem to come from many more locations. 3D audio engineers accomplish this feat by accounting for how sound waves reach you, based on the shape of your head and the location of your ears. For example, if an audio engineer wants to create a sound that seems to come from in front of you and slightly to the right, the engineer will design the sound to start playing in the right headphone a tiny fraction of a second earlier than in the left, and to be slightly louder in the right headphone.
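The headphone trick can be sketched in a few lines. This is a deliberately crude version, assuming that only the two cues from this article matter: the far ear gets the sound slightly later (a time difference) and slightly quieter (an intensity difference). Commercial 3D audio also models how the head and outer ear filter sound, typically with head-related transfer functions (HRTFs), which this sketch leaves out. The function name `place_sound` and the example cue sizes are hypothetical.

```python
def place_sound(mono, sample_rate, itd_seconds, level_difference_db, toward="right"):
    """Return (left, right) channels that make a mono sound seem to come from one side.

    The ear on the `toward` side hears the sound first and at full strength;
    the other ear hears it itd_seconds later and level_difference_db quieter.
    """
    if toward not in ("left", "right"):
        raise ValueError("toward must be 'left' or 'right'")
    delay = round(itd_seconds * sample_rate)      # far-ear lag, in samples
    gain = 10 ** (-level_difference_db / 20)      # convert dB to an amplitude factor
    near = list(mono) + [0.0] * delay             # pad so both channels match in length
    far = [0.0] * delay + [s * gain for s in mono]
    return (far, near) if toward == "right" else (near, far)

# A short click, placed slightly to the right (illustrative cue sizes):
click = [1.0, 0.6, 0.3, 0.1]
left, right = place_sound(click, sample_rate=48000, itd_seconds=0.0003,
                          level_difference_db=3.0, toward="right")
print("left: ", [round(s, 2) for s in left])
print("right:", [round(s, 2) for s in right])
```

Played over headphones, the right channel here starts 14 samples (about 0.3 ms) earlier and is a little louder, the combination of cues the brain tends to read as "to the right."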

Video games and movies become more immersive and lifelike when paired with these 3D audio tricks. In a movie theater, for example, many speakers placed around the room can steer the direction of a sound so that what you hear matches what you see. Imagine that you are watching a movie and an actress is having a phone conversation on the right side of the screen. Her speech plays mostly through the right speakers, but as she moves across the screen from right to left, the sound follows her gradually and smoothly. This effect is the result of numerous speakers working in tight synchrony.
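One common way to move a sound smoothly across a row of speakers is pairwise amplitude panning with a constant-power (sine/cosine) pan law. Real cinema sound systems are more elaborate, so treat this as an illustrative sketch, assuming evenly spaced speakers along the screen; `speaker_gains` is a hypothetical name.

```python
import math

def speaker_gains(position, n_speakers):
    """Loudness factor for each speaker in a row so a sound seems to sit at `position`.

    position: 0.0 = far left of the screen, 1.0 = far right.
    Only the two speakers nearest the position play. A sine/cosine pan law keeps
    the total power (sum of squared gains) at 1, so loudness stays steady as it moves.
    """
    if n_speakers < 2 or not 0.0 <= position <= 1.0:
        raise ValueError("need at least 2 speakers and 0 <= position <= 1")
    x = position * (n_speakers - 1)          # position measured in speaker spacings
    i = min(int(x), n_speakers - 2)          # left-hand speaker of the active pair
    frac = x - i                             # 0 at speaker i, 1 at speaker i + 1
    gains = [0.0] * n_speakers
    gains[i] = math.cos(frac * math.pi / 2)
    gains[i + 1] = math.sin(frac * math.pi / 2)
    return gains

# The actress walks from the right edge of the screen to the left edge:
for position in (1.0, 0.8, 0.6, 0.4, 0.2, 0.0):
    print(position, [round(g, 2) for g in speaker_gains(position, 5)])
```

Using sine and cosine rather than a straight-line crossfade keeps the combined power constant, so the voice does not seem to dip in loudness when it sits halfway between two speakers.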

Virtual reality (VR) takes this immersive experience to a higher level by changing the direction of the sound based on where you are looking or where you are positioned in virtual space. In VR, by definition, you are virtually placed in a scene, and both the visual and auditory experiences should mirror your experience of the real world. In a successful VR simulation, the direction your head is facing and where you are looking determine where you perceive the audio as coming from. Look directly at a spaceship and the sound of its engines comes from straight ahead of you, but turn to the left and now the sound comes at you from the right. Move behind a big object and now the virtual sound waves hit the object directly and reach you only indirectly, making the sound seem more muffled and quieter.
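The head-tracking step can be illustrated with a little angle arithmetic. The sketch assumes that angles are measured in degrees clockwise around the listener (so turning left makes the head angle smaller) and that the VR system reports which way the head is facing; `relative_azimuth` and `side` are hypothetical names, and a real VR audio engine would go on to turn the result into timing and loudness cues for each ear.

```python
def relative_azimuth(source_deg, head_yaw_deg):
    """Direction of a sound relative to where the listener's head is facing.

    Both angles are measured clockwise in degrees in the virtual world.
    Result is in [-180, 180): positive = to the listener's right, negative = left.
    """
    return (source_deg - head_yaw_deg + 180) % 360 - 180

def side(relative_deg, tolerance=1.0):
    """Describe a head-relative direction in words."""
    if abs(relative_deg) <= tolerance:
        return "straight ahead"
    if abs(relative_deg) >= 180 - tolerance:
        return "behind"
    return "right" if relative_deg > 0 else "left"

spaceship = 0  # the spaceship sits due "north" in the virtual world
for head_yaw in (0, -90, 90, 180):   # face it, turn left, turn right, turn around
    rel = relative_azimuth(spaceship, head_yaw)
    print(f"head at {head_yaw:>4}: engine sound {rel:>4} degrees, {side(rel)}")
```

Turning the head 90 degrees to the left moves the spaceship's sound to +90 degrees, to the listener's right, matching the example in the paragraph above.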

Conclusion

Research scientists and professionals in the film and video game industry have used simulated sounds to learn more about hearing, and to enhance our entertainment experiences. Some scientists focus on how the brain processes sounds, while others analyze the physical properties of sound waves themselves, such as how they bounce or are otherwise disrupted. Some even investigate how other animals hear and compare their abilities to our own. In turn, professionals in the film and video game industries have used this research to help make the experience of movie-goers and gamers more immersive. In virtual environments, designers can make virtual sound waves behave like sound waves do in real life. When you are playing a video game or watching a movie, it is easy to take for granted the research and time that went into creating this experience. Maybe the next advancement in immersive sound technology will start with you and your own curiosity about sound waves and how the auditory system works!

Glossary

Amplitude: The size of a sound wave; the attribute of a sound that influences its perceived loudness.

Pitch: The quality of a sound that depends on the frequency, or rate, of its vibrations; the perceived highness or lowness of a tone or sound.

Doppler Effect: An increase or decrease in the frequency of a sound wave as the source of the sound and the observer move toward or away from each other.

Cochlea: A (mostly) hollow tube in the inner ear that is usually coiled like a snail shell and contains the sensory organs of hearing.

Auditory Cortex: The area of the brain, located in the temporal lobe, that processes information received through hearing.

Interaural Time Difference: The difference in the arrival time of a sound at the two ears.

Interaural Intensity Difference: The difference in the loudness and frequency content of a sound received by the two ears.

Three-Dimensional Audio: A group of sound effects used to manipulate what is produced by stereo speakers or headphones so that sound sources seem to be placed anywhere in three-dimensional space.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.