Abstract

Your brain controls everything you do, and it is much more powerful than any computer you can find. This complex organ sends messages using cells called neurons, and it never stops analyzing data, even as you sleep. Scientists are trying to understand the brain to create a digital version. But is it possible for computers to do the same things our brains can? For computers to do so, we need to create something called an artificial neural network, which has digital neurons connected into a complex net that resembles the structure of the brain. To make an artificial neural network, we need to use the most universal language—mathematics.

Can Computers Do the Work of the Brain?

For many years, we have used computers to work, play, communicate, watch movies, and do plenty of other things. Every year, we develop computers that are better and faster. However, this is not enough for us: we dream of supercomputers with computing power that goes beyond our imagination. Such computers would allow us to process much more data in a short time. Scientists get inspiration for these powerful computers from the way the brain is built: full of cells called neurons, connected to each other in a huge net in which electrical impulses transfer data between the neurons. Scientists are trying to create a digital version of the brain’s natural neural network. They create artificial neurons that are connected within a huge network in which, instead of the electrical impulses used in our brains, data is represented by numbers in electronic circuits. This is called an artificial neural network, and these networks can perform some tasks, like image recognition, very efficiently. But we have still not reached the limit of what computers can do with artificial neural networks. Maybe one day, computers will be able to think like humans!

A Human’s On-Board Computer

Did you know that, in just one second, the brain can receive billions upon billions of signals? That is a huge amount of data flowing through the brain every single second! The human brain is our on-board computer, and it is the most complex machine that has ever existed. It is much smaller than a soccer ball but has more cells than there are stars in the Milky Way! If the body were a ship, the brain would be the captain. The brain can adapt very quickly to completely new, unfamiliar situations. It can also recognize objects in situations where even the world’s best computers still struggle. When you see the face of your best friend, your brain recognizes that face in a fraction of a second, without any conscious effort. Computers can recognize many complicated patterns, but even the fastest computer cannot yet match the human brain at everything it does. Scientists from various fields are still trying to understand all the processes that take place in the brain when it carries out tasks like pattern recognition.

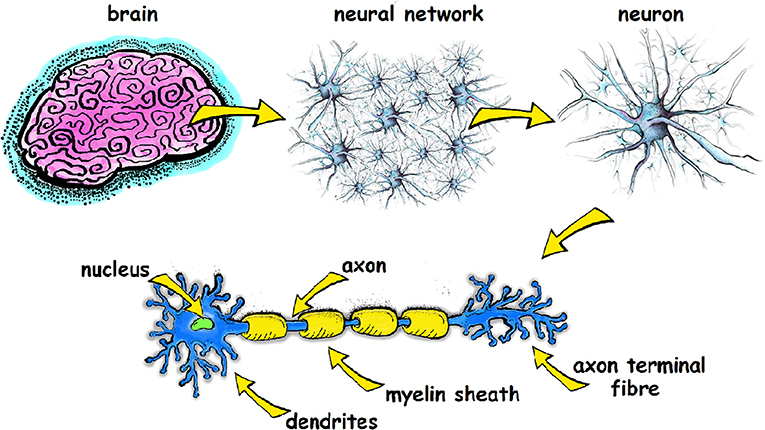

As we mentioned, neurons are brain cells that can conduct electrical impulses to transmit information to other cells. Neurons have a cell body with a nucleus and long, wire-like structures that extend from the cell body to transmit electrical signals. These wire-like structures can be divided into two groups: dendrites, which receive data from other neurons and conduct it toward the cell body, and axons, which carry data away from the cell body toward other neurons. Dendrites are usually much shorter than axons, and axons often have a fatty covering called a myelin sheath, which acts like the insulation around a wire and helps the electrical signal travel quickly. The place where two neurons meet to transfer signals from one to the other is called the synapse (Figure 1).

- Figure 1 - The brain is composed of many neurons connected in a complex neural network. Each neuron has a cell body, containing the nucleus, and extensions from the cell body called the axon and dendrites. Dendrites receive electrical signals coming into the cell, while the axon transmits an electrical signal away from the cell, toward other neurons.

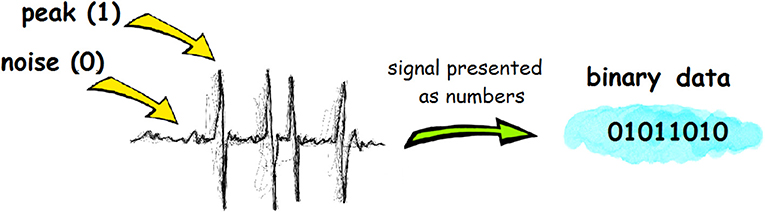

Even when neurons are not sending an electrical impulse to other neurons, there is always a low, background level of electrical activity traveling along them. Scientists call this background electricity “noise,” and when equipment is used to graph the electrical activity of neurons, the noise appears as a slightly squiggly line. When the neuron transmits an electrical signal, this is seen as a sharp spike or peak on the graph (Figure 2). Thus, we can think of the neuron as existing in one of two states: either “off” (noise) or “on” (sending a sharp electrical signal) [1]. These states can be represented in the language of mathematics with two symbols: “0” (off) and “1” (on). The language of 1s and 0s is known as binary language, and it is also the language of computers!

- Figure 2 - Electrical impulses can be represented with binary language, as a series of 1s and 0s.
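To make the idea of binary coding concrete, here is a minimal Python sketch that turns a list of voltage readings into 1s and 0s. It assumes a hypothetical cutoff, `SPIKE_THRESHOLD`, and a made-up trace in arbitrary units; real recordings need more careful spike detection, so treat this as an illustration rather than a laboratory method.

```python
# A minimal sketch: turn a recorded voltage trace into binary (0 = noise, 1 = spike).
# The sample values and SPIKE_THRESHOLD are made-up numbers (arbitrary units) for illustration only.

SPIKE_THRESHOLD = 0.5  # hypothetical cutoff separating sharp spikes from background noise


def to_binary(trace, threshold=SPIKE_THRESHOLD):
    """Return 1 for each sample above the threshold (a spike) and 0 otherwise (noise)."""
    return [1 if value > threshold else 0 for value in trace]


# Small wiggles (noise) with two sharp peaks (spikes).
voltage_trace = [0.05, -0.02, 0.03, 0.90, 0.04, -0.01, 0.95, 0.02]
print(to_binary(voltage_trace))  # [0, 0, 0, 1, 0, 0, 1, 0]
```

Each small wiggle becomes a 0 and each sharp peak becomes a 1, which is the same “off/on” picture shown in Figure 2.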

Creating an Artificial Neural Network

Imagine building a large 3D structure from pipes of various shapes and sizes. Each pipe can be connected to many other pipes and has a valve that can be opened or closed. As a result, you end up with millions of possible combinations of pipe connections. That sounds complicated, doesn’t it? Now, let’s connect the pipe contraption to a water tap. Pipes of different sizes will allow the water to flow at different speeds, and if the valves are closed, the water will not flow. The water represents the data that is transferred in the brain, while the pipes represent neurons. What about the valves? They represent the connections between neurons: the synapses. Scientists are trying to create a digital brain that connects digital neurons like our imaginary water pipes. Based on the binary coding of neurons we described in the previous section, they hope to create a thinking machine that is an accurate electronic version of a brain, full of digital neurons working together in a large, efficient, reliable network: an artificial neural network.

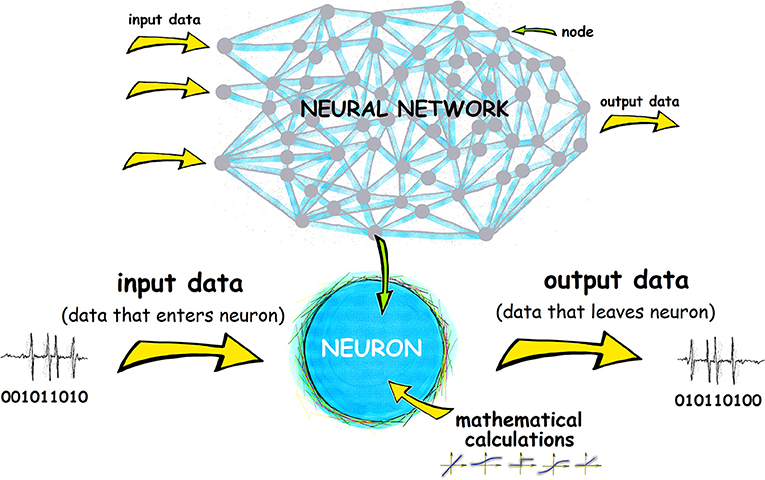

The digital neurons that make up an artificial neural network are called nodes. Each connection coming into a node has a special number, called a weight, which is set by the developers or adjusted automatically as the network learns. Weights can be compared to the valves in our imaginary pipe structure, or to the synapses in the brain: valves regulate the strength of the incoming signal. Now let’s imagine that the pipes in our structure lead to a tank. The tank represents an artificial neuron. Each valve regulates the amount of water entering the tank. The total amount of water coming in from the various pipes is the “input” to the tank, which is called the input signal in an artificial neural network. The valves represent the weights, which regulate the strength of the signals coming into the node from the environment and from other neurons. Meanwhile, the water the tank sends onward is like the output data: the result the neuron passes on after combining everything it received, such as images, signals, and sounds from the outside world.

Each node in the artificial neural network has multiple inputs, representing input signals from the environment or from other neurons. When the network is active, the node receives different data (signals, which can be represented by numbers) through each input and multiplies each number by its assigned weight. The node then adds up all of these weighted input signals to obtain a total. Remember how we explained that neurons regularly experience a low level of electrical noise, which is not strong enough to transmit a signal? Well, in the artificial neural network, if the total is below a predefined threshold, the node does not pass data on to the next layer: it is considered noise. If the total exceeds the threshold, the node sends an output signal to the next layer, the same way a signal is sent across a synapse when the electrical activity is high enough. In the simplest artificial neurons, this output is written in the binary language of 1s and 0s: 1 when the node fires and 0 when it does not.
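The multiply, add, and compare steps of a single node can be sketched in a few lines of Python. The function name `node_output` and all of the numbers are hypothetical, chosen only to show the calculation; here a total exactly equal to the threshold counts as noise, which is one possible convention rather than the only one.

```python
# A minimal sketch of one node: multiply each input by its weight, add the results,
# and compare the total with a threshold. All numbers below are illustrative only.


def node_output(inputs, weights, threshold):
    """Return 1 (send a signal) if the weighted sum exceeds the threshold, else 0 (noise)."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    # Each weight acts like a valve: it scales how much of its input gets through.
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


# Three incoming signals, each with its own (hypothetical) weight.
signals = [1, 0, 1]
weights = [0.5, 0.9, 0.25]
print(node_output(signals, weights, threshold=0.7))  # 1, because 0.5 + 0.25 = 0.75 is above 0.7
print(node_output(signals, weights, threshold=1.0))  # 0, because 0.75 is below 1.0
```

Changing a single weight or the threshold can change whether the node fires, which is why the weights matter so much in a network.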

To design a new generation of supercomputers inspired by the human brain, we need to have a large neural network built from artificial neurons (Figure 3). Creating an artificial neural network would not be possible without using mathematics to represent the way real neurons function.

- Figure 3 - An artificial neural network is made up of artificial neurons, called nodes, which perform calculations on input data and pass on the results of those calculations as output data.

Artificial Neurons: Then and Now

The node, or artificial neuron, is the basic unit of an artificial neural network. The first artificial neuron was proposed in 1943 by Warren McCulloch and Walter Pitts. Their simple model later inspired the perceptron, an artificial neuron introduced by Frank Rosenblatt in the late 1950s. Data enters the perceptron, undergoes mathematical calculations, and then leaves the perceptron. Artificial neurons can be arranged in several layers so that each layer performs different calculations. The first layer receives the input data, the last layer is the output layer, and the layers in between are called hidden layers. Hidden layers do not make final decisions: they only analyze the details of the input signal and pass the information on to the next layer for further analysis. A network of perceptrons is the simplest form of an artificial neural network, but scientists are trying to build very complex networks that connect many neurons which, unlike perceptrons, can perform advanced calculations, much like neurons in our brains.
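The sketch below, again in Python, stacks threshold nodes into two layers: a layer in between (often called a hidden layer) that looks at the details of the input, and an output layer that makes the final decision. The weights and thresholds are hand-picked assumptions, not values from any real network. With these choices the network outputs 1 only when exactly one of its two inputs is 1, a pattern known as “exclusive or” that a single threshold node cannot produce on its own.

```python
# A minimal sketch of a tiny layered network built from threshold nodes.
# The weights and thresholds are hand-picked for illustration, not learned.


def node_output(inputs, weights, threshold):
    """One threshold node: 1 if the weighted sum exceeds the threshold, else 0."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


def layer_output(inputs, layer):
    """Run every node in a layer on the same inputs; layer is a list of (weights, threshold)."""
    return [node_output(inputs, weights, threshold) for weights, threshold in layer]


# Hidden layer: the first node fires if at least one input is 1, the second only if both are 1.
HIDDEN_LAYER = [([1, 1], 0.5), ([1, 1], 1.5)]
# Output layer: fires if the first hidden node is on and the second is off.
OUTPUT_LAYER = [([1, -1], 0.5)]


def network(inputs):
    hidden = layer_output(inputs, HIDDEN_LAYER)  # details passed on to the next layer
    return layer_output(hidden, OUTPUT_LAYER)[0]  # final decision


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", network([a, b]))
# 0 0 -> 0
# 0 1 -> 1
# 1 0 -> 1
# 1 1 -> 0
```

In real networks, weights like these are usually found by training on many examples rather than chosen by hand.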

Conclusions

Artificial neural networks are created to digitally mimic the human brain. They are currently used for complex analyses in various fields, ranging from medicine to engineering, and these networks could be used to design the next generation of computers [2]. Artificial neural networks are already an important part of the gaming industry. What else can we do with artificial neural networks? We can use them to recognize handwriting, which can be useful in industries such as banking. Artificial neural networks can also do many important things in the field of medicine. We could use them to build models of the human body that could help doctors accurately diagnose diseases in their patients. And thanks to artificial neural networks, complex medical images, such as CT scans, can be analyzed more quickly and accurately. Machines based on neural networks may be able to solve many abstract problems on their own, learning from their mistakes. Maybe one day we will be able to link humans to machines through a tool called a brain-computer interface! This would transform human thoughts into signals that could control machines. Perhaps, in the future, we will only need to use our thoughts to interact with our environment.

Glossary

Neuron: A cell of the brain and nervous system, responsible for sending signals to other cells.

Artificial Neural Network: A digital version of the brain’s neural network that carries out mathematical calculations within elements called nodes.

Dendrite: A short extension of a neuron that receives signals from other neurons and carries them toward the cell body.

Axon: A long, thin extension of a neuron that carries electrical signals away from the cell body toward other neurons.

Synapse: A point of contact where a sending neuron transmits a signal to a receiving neuron.

Binary Language: A way of representing data using only two symbols, 0 and 1; it is the language computers use.

Node: The element of an artificial neural network that acts as a digital version of a neuron.

Weight: A number assigned to each input of a node that strengthens or weakens the signal coming through it.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

[1] Gerstner, W., Kistler, W. M., Naud, R., and Paninski, L. 2014. Neuronal Dynamics: From Single Neurons to Networks and Models of Cognition. Cambridge: Cambridge University Press.

[2] Ghosh-Dastidar, S., and Adeli, H. 2009. Spiking neural networks. Int. J. Neural Syst. 19:295–308. doi: 10.1142/S0129065709002002