Abstract

In the field of artificial intelligence, which has a large influence on our lives today, the term “neural network” has been gaining popularity. It usually refers to a dense web of simple units, each of which may be in one of two states (on/off) and impacts the state of other units connected to it. What exactly is the relationship between the way a nerve cell acts and the way artificial intelligence works? How is the action of an artificial neural network similar to a network of neurons in the human brain? This article tries to answer these questions. We will summarize how thinking happens, provide a short description of how a nerve cell works, describe the similarities between a nerve cell and a basic unit of a logical system, and finally show how connecting several such units is the basis for artificial intelligence.

Thinking Involves Linking Concepts Together

From the dawn of culture, humans have been intrigued by their ability to think, perceive, classify, and make decisions. A common notion in the fields of psychology and philosophy is that our thoughts and ideas are abstract, basic concepts that become linked to each other in different combinations as we experience the world around us. The name of this notion is associationism. According to associationism, thinking is a kind of roaming around inside an interconnected network of concepts that are joined to each other with various strengths. The trigger for roaming may be an external, sensory input such as sight, sound, smell, or touch.

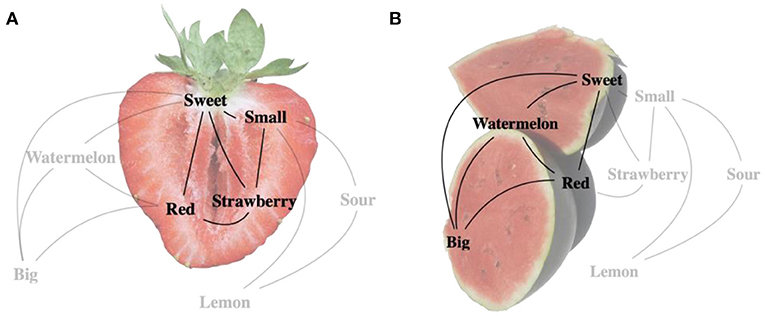

For instance, let us imagine a group of concepts: sour, sweet, small, big, red, lemon, strawberry, and watermelon (Figure 1). Associationism implies that when the image of a strawberry comes to mind, there is a co-activation of associated, strawberry-related concepts, such as red, small, and sweet (but not lemon, big, or watermelon). The strength of the connections between associated concepts is built up by our interactions with the world around us. The more we experience a sweet taste occurring together with the sight of a fruit that happens to be red, the stronger the connection becomes between those three concepts.

- Figure 1 - The thinking process can be described as roaming around inside a network of basic concepts that are connected in various combinations and strengths, determined by our experiences.

- (A) Black lines connect basic concepts that, together, represent the concept of “strawberry.” Gray lines represent weak connections to basic concepts that do not belong to “strawberry.” (B) Black lines connect basic concepts that represent the concept “watermelon.” The gray lines represent weak connections to basic concepts that do not belong to “watermelon” (Figure credit: Renee Comet; public domain, via Wikimedia Commons).

How Nerve Cells Work

Associationism is interesting to scientists who are trying to understand how the human brain works. What happens inside the brain when we grasp a concept such as “strawberry”? The journey toward an answer to this question began back in 1835, following the development of methods that allowed high-quality microscopic observation of brain tissues. These early observations showed that the brain is made up of nerve cells, also known as neurons, that are connected to each other through fine fibers [1]. Over the years, these findings led to the development of a hypothesis called the neuron doctrine, which links associationism to the structure of the brain. Think about it this way: a concept (such as red, or small, or sweet) is represented in the brain by the activation of neurons. Fibers connect clusters of neurons, and this supports the association between concepts. Unique groups of neurons become active when we think of a complex concept or see an object in front of our eyes, like a strawberry or a watermelon (Figure 1).

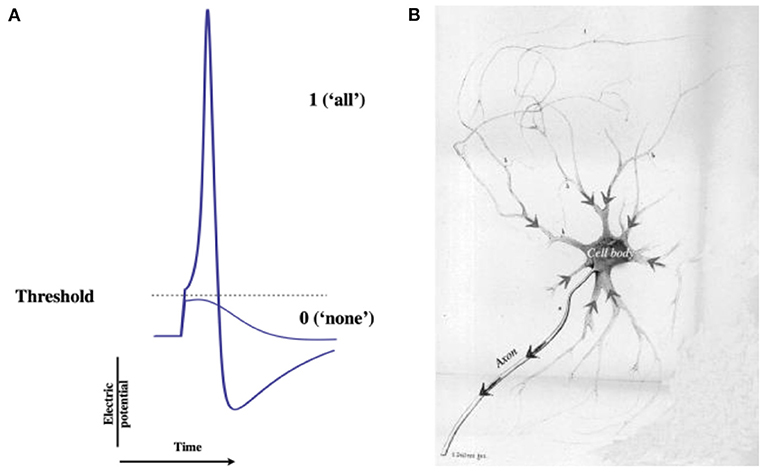

But what actually happens in a nerve cell when the concept red comes to mind? The first clues came in 1926, with the development of advanced electronics that allowed identification and characterization of electric signals in nerves. These electrical signals happen when a nerve cell is active [2], and they involve a tiny change in the voltage measured between the two sides of the nerve cell’s membrane. Specifically, the watery solution between all the body’s cells (neurons included) is similar to sea water, with high concentrations of sodium ions (Na+) and low concentrations of potassium ions (K+). Na+ and K+ are common ions that carry positive electrical charges. Inside the nerve cell, K+ is in high concentration and Na+ is in low concentration. This difference between the internal and external ion concentrations leads to a voltage difference between the inside and outside of the cell, much like the difference between the two poles of a battery. Measuring the voltage showed that the electrical activity of a nerve cell is a very short (a fraction of a second) change in the electrical potential inside the nerve cell. This phenomenon was named a neural impulse or “spike” (Figure 2). Today we know that the spike results from the flow of Na+ and K+ ions through the cell’s membrane. To create a neural impulse, the nerve cell must receive a stimulation that is stronger than a certain threshold; if the stimulation is not strong enough, nothing happens.

- Figure 2 - Nerve impulse and nerve cell.

- (A) The shape of a nerve impulse. When a stimulation crosses a threshold, a neural impulse is created, with a fixed shape and strength (1; “all”). If the stimulation does not cross the threshold, no neural impulse will be created (0; “none”). (B) A painting of a nerve cell showing stimulatory signals moving toward the nerve cell’s body where, if the sum of the signals exceeds the threshold value, a neural impulse is created, which is transferred to nearby nerve cells through a fiber called the axon, generating inputs to those cells (Image credit: Karl Deiters, 1834–1863; arrows and English text added).

This “all-or-nothing” response of the nerve cell is similar to an on/off switch for a light. We can think about it in numerical terms as 1 (all) or 0 (nothing). This means that the strength of the stimulation causing the neural impulse does not affect the size of the neural impulse. These neural impulses allow us to feel, think, move, and more. Note that the electrical activity in the brain results from electrical impulses in many nerve cells simultaneously.

At this point, there was still an important unanswered question: how exactly does a web of on/off switches explain how humans think and act intelligently? The search for an answer to this question has given rise to an exciting field of research that influences our daily lives: artificial intelligence (AI). Artificial intelligence technologies help people in many fields, including science and engineering. These technologies can be used to perform tasks ranging from identifying and sorting medical data and images to controlling complex machines like autonomous cars. The origin of artificial intelligence may be traced back to 1943, when researchers suggested a possible connection between two very different scientific domains: mathematical logic and the operation of networks of neurons [3]. But what is the unique connection between these two domains?

Logical Systems and Artificial Intelligence

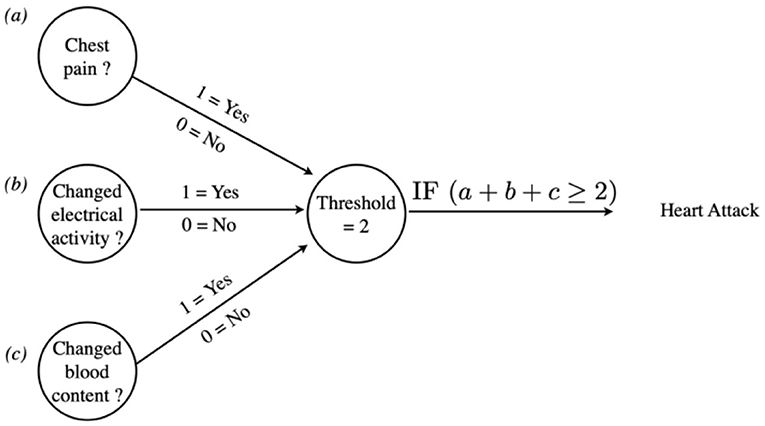

A significant part of the thinking process may be described as a chain of statements that undergo true/false tests. To illustrate, let us use an example of the thinking process a doctor uses when a patient comes to her office and complains of having chest pains. The physician suspects that the cause of the chest pain is a heart attack. But to diagnose a heart attack, at least two of the following statements must be true: [a] Pains in the chest: yes or no? [b] Changes in the electrical activity of the heart: yes or no? [c] Changes in the amount of a particular protein in the blood: yes or no? This means that if the patient only complains about chest pain (meaning the answer to [a] is “yes”), this is not enough to diagnose a heart attack; in that case, the doctor will diagnose a heart attack only if the answer to statement [b] and/or [c] is also “yes.” The doctor makes the diagnosis based on a logical expression: “if [a] and [b] are true, or [a] and [c] are true, or [b] and [c] are true, or [a] and [b] and [c] are true, then there is a heart attack.”
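The doctor's logical expression can be written out and checked for every combination of answers. The following is a minimal Python sketch; the function name `heart_attack_rule` is made up for this illustration and is not part of the original text. It shows that the rule is true exactly when at least two of the three statements are true (the case where all three are true is already covered by any of the pairs).

```python
from itertools import product

def heart_attack_rule(a: bool, b: bool, c: bool) -> bool:
    """The doctor's rule: true when at least two of [a], [b], [c] are true."""
    return (a and b) or (a and c) or (b and c)

# Go through all eight combinations of yes/no answers.
for a, b, c in product([False, True], repeat=3):
    print(f"[a]={a!s:5} [b]={b!s:5} [c]={c!s:5} -> {heart_attack_rule(a, b, c)}")
```

Only the rows with two or three "True" answers end in "True," which matches the rule described in the text.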

So, since a nerve cell responds in an all-or-nothing way and can only be in one of two states (0—not active, or 1—active), could a network of nerve cells represent the process of diagnosing a heart attack? Figure 3 shows such a network. This network has two layers. The first layer, called the input layer, consists of three cells whose activity represents the three signs of a heart attack: cell [a] represents chest pains, cell [b] represents changes in the electrical activity of the heart, and cell [c] represents changes in the protein concentration in the blood. The second layer (output layer) contains only one neuron, which receives the summed input of the three cells of the first layer. The activity of this one neuron in the second layer decides whether the conditions for diagnosing a heart attack have been fulfilled (Figure 3).

- Figure 3 - Artificial neural network that can diagnose a heart attack.

- If at least two of the three conditions in the input layer on the left, (a), (b), and (c), occur, then the sum of their actions (for example, 1 + 1 = 2) reaches the threshold (2), which is enough to generate a neural impulse in the output layer (the single cell on the right) and represents a diagnosis of heart attack.
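The network in Figure 3 can be imitated in a few lines of code. This is a sketch under simple assumptions: each input cell sends either 0 or 1, all connections have the same strength, and the output cell fires when the sum reaches the threshold of 2. The function name `threshold_unit` is chosen for this illustration only.

```python
from itertools import product

def threshold_unit(inputs, threshold=2):
    """All-or-nothing cell: 1 if the summed input reaches the threshold, else 0."""
    return 1 if sum(inputs) >= threshold else 0

# Try every possible state of the three input cells (a), (b), (c).
for a, b, c in product([0, 1], repeat=3):
    output = threshold_unit((a, b, c))
    print(f"a={a} b={b} c={c} sum={a + b + c} output={output}")
```

The output is 1 only when the sum is 2 or 3, so this single cell gives the same answers as the doctor's logical expression.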

Researchers found that what a network can calculate is determined by the relationships between layers, by the strengths of the connections, and by the threshold value for activating the nerve cell. It seems that almost every logical expression can be described in terms of a neural network. This discovery was the basis for artificial intelligence, which is based on the activity of networks containing thousands of “cells” and many “layers,” and is now part of almost all areas of our lives.
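To illustrate how connection strengths and thresholds decide what a cell computes, here is a small Python sketch. The particular strengths and thresholds are chosen for this example and do not come from the article; the negative strength used for "not" stands for a connection that quiets the cell instead of exciting it, which is an added assumption. The last function connects several units in layers to build "exclusive or" (one or the other, but not both).

```python
def unit(inputs, weights, threshold):
    """Threshold cell: multiply each input by its connection strength, sum, compare."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0

def AND(a, b):
    return unit((a, b), (1, 1), threshold=2)   # both inputs needed

def OR(a, b):
    return unit((a, b), (1, 1), threshold=1)   # one input is enough

def NOT(a):
    return unit((a,), (-1,), threshold=0)      # negative strength flips the input

def XOR(a, b):
    # Units feeding other units: "a or b" and "not (a and b)".
    return AND(OR(a, b), NOT(AND(a, b)))

for a in (0, 1):
    for b in (0, 1):
        print(f"a={a} b={b} AND={AND(a, b)} OR={OR(a, b)} XOR={XOR(a, b)}")
```

The same kind of cell gives different logical operations depending only on its connection strengths and threshold.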

Further important progress was made as researchers figured out how to “teach” the network, through experience, to identify objects presented to the input layer, group them, and make the “right” decision in the output layer. Learning is performed by changing the connection strengths between cells or changing the threshold value. For instance, in Figure 3, the network could be “trained” to identify a heart attack if, and only if, all three conditions ([a] and [b] and [c]) were true; the “learning” could be achieved by changing the threshold value of the cell in the second layer to 2.5 instead of 2. With a threshold of 2.5, a sum of 2 is no longer enough, and only a sum of 3 activates the cell.
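The effect of changing the threshold can be checked directly. The sketch below compares the output cell of Figure 3 with a threshold of 2 and with a threshold of 2.5. It only shows the result of the change, not how a network would arrive at the new threshold during training; the function name `output_cell` is made up for this illustration.

```python
from itertools import product

def output_cell(a, b, c, threshold):
    """Output cell of Figure 3: fires (1) when the summed input reaches the threshold."""
    return 1 if a + b + c >= threshold else 0

# Compare the two thresholds for every state of the three input cells.
for a, b, c in product([0, 1], repeat=3):
    before = output_cell(a, b, c, threshold=2)
    after = output_cell(a, b, c, threshold=2.5)
    print(f"a={a} b={b} c={c} threshold 2 -> {before}, threshold 2.5 -> {after}")
```

With the threshold at 2.5, the cell fires only when all three inputs are 1.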

Gazing Into the Future

Many challenges still face researchers who are interested in making analogies between the body’s neural network and artificial intelligence. Several of these challenges result from the differences that exist between an abstract, mathematical representation of a nerve cell and a real nerve cell in the body. Yet, the biggest challenge of all keeps nagging our brains—is that all a person is? Are all our feelings, thoughts, and desires—our entire personalities—merely a network of electrical and chemical components seated in the brain? This answer will probably not be supplied by artificial intelligence; we will have to keep pondering it ourselves.

Glossary

Associationism: The idea that, as we experience the world, we connect abstract, basic concepts to each other in different combinations. For example, sweet, red, and small make up the object “strawberry.”

Neuron: A brain cell capable of generating electrical impulses.

Neuron Doctrine: The idea that our ideas or concepts are represented in the brain by the activation of one or more nerve cells. Fibers connect nerve cells that represent similar basic concepts.

Ions: Charged atoms or molecules. A positively charged ion is called a cation; a negatively charged ion is called an anion.

Neural Impulse: A small, quick change in the voltage between two sides of the cell membrane, which results from movement of ions between the inside and outside of the cell.

Artificial Intelligence: Behavior of a computer or other machine that reflects an intelligence usually only attributed to a person. For example, a computer that plays chess, completes sentences, or summarizes an article.

Logical Expression: A chain of operations such as “if-then,” “and,” “or,” or “not,” that is performed on data that is put into the system; the result is “true” (1) or “false” (0).

Conflict of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

Thank you to Ido Marom for talks and remarks during the preparation of the article.

References

[1] Chvátal, A. 2015. Discovering the structure of nerve tissue: Part 2: Gabriel Valentin, Robert Remak, and Jan Evangelista Purkyně. J. Hist. Neurosci. 24:326–51. doi: 10.1080/0964704X.2014.977677

[2] Adrian, E. D., and Zotterman, Y. 1926. The impulses produced by sensory nerve endings: Part 3. Impulses set up by touch and pressure. J. Physiol. 61:465–83.

[3] McCulloch, W. S., and Pitts, W. 1943. A logical calculus of the ideas immanent in nervous activity. Bull. Math. Biophys. 5:115–33.