Abstract

The science of light manipulation started with the ancient Greeks, so we have had many years to develop it. Lenses and holograms are part of our everyday lives. Light and sound are very similar: they are both waves, and they both have particles associated with them. So, why do we not have lenses or displays for sound? Or do we? This article will tell the story of how sound technology is catching up with light technology. We will tell you about acoustic metamaterials, an emerging technology that is quickly becoming part of our loudspeakers, our shows, our cars, our public spaces, and our hospitals—all the places where we want control over sound and noise. The future of shaping and designing sound is in the making! Maybe someday, sound experts will even teach something to light experts!

Imagine being in the audience at a TV studio, waiting for a quiz show to start. There are two teams of three people each, sitting at desks facing each other, with a referee in between them. On the left side is “Team Light,” wearing t-shirts with an image of a lightbulb. On the right is “Team Sound,” wearing DJ hats and t-shirts with musical notes. The referee introduces the rules: teams score points by telling the audience about things that make their topic special.

Round 1: Interference and Diffraction

Team Light is the first to hit the buzzer. “Light is a wave!” shouts their captain. Looking around proudly, the captain adds, “This means we can experience interference or diffraction.”

“Correct!” says the referee, giving Team Light a point. She then asks, “Could you please explain what those words mean?”

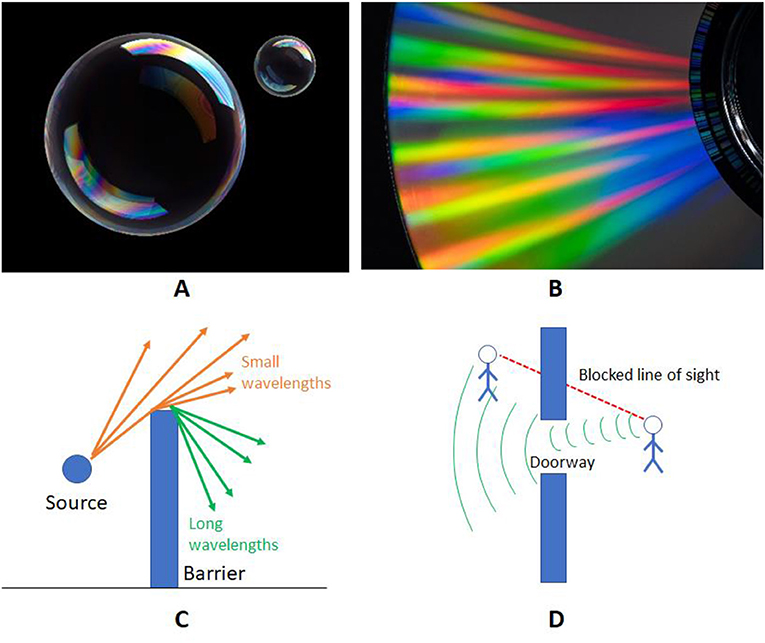

Team Light replies, “Light travels as a wave, with high peaks and low troughs just like waves on the ocean. Wavelength is the distance between one peak in the wave and the next. Interference happens when two waves meet in the same space and affect each other. A scientist named Thomas Young demonstrated this really well in a famous experiment called the ‘two-slit experiment’. He showed that when the peaks of two waves line up, they add together, creating a bigger wave and brighter light. When peaks meet up with troughs, the peaks and troughs cancel out, creating a smaller wave and dimmer light. Interference is what makes soap bubbles and peacock feathers look so colorful. In bubbles, interference happens between the waves reflected from the outer surface and those reflected from the inner surface, so that each color (i.e., each wavelength) appears very strong in some places and very dim close by (Figure 1A).”

- Figure 1 - (A) Interference pattern over the thin film of a soap bubble.

- (B) Diffraction on the surface of a CD. (C) Sound diffraction can cause traffic noise to make it past a barrier, because the wavelength of the low-frequency noise is bigger than the barrier. This “leakage” of sound is a common problem when designing barriers for motorways, which is solved by adding hat-shaped elements on top of the barrier: these trap the long wavelengths and therefore make the barrier more effective. (D) Sound can also “go around a corner,” even when line of sight is blocked. This happens because diffraction transforms the doorway into a source of sound itself (photograph credits: A, B iStockphoto.com).
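Readers who like to experiment on a computer can try out the adding and cancelling of waves described by Team Light. The short Python sketch below is only an illustration, not part of the original research: it adds two identical waves, once with their peaks lined up and once with peaks meeting troughs. The function name `combined_amplitude` and the number of samples are made up for this example.

```python
import math

def combined_amplitude(phase_shift, samples=1000):
    """Largest height reached when two equal waves are added together.

    The second wave is shifted by `phase_shift` (in radians) relative
    to the first. Both waves have a height of 1 (an assumed value).
    """
    peak = 0.0
    for i in range(samples):
        t = 2 * math.pi * i / samples          # one full cycle of the wave
        total = math.sin(t) + math.sin(t + phase_shift)
        peak = max(peak, abs(total))
    return peak

in_step = combined_amplitude(0.0)          # peaks meet peaks
out_of_step = combined_amplitude(math.pi)  # peaks meet troughs

print(f"peaks lined up:      {in_step:.2f}")
print(f"peaks meet troughs:  {out_of_step:.2f}")
```

When the waves are in step, the combined wave is twice as tall as each single wave. When they are half a cycle out of step, almost nothing is left.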

“That is so interesting!” says the referee. “Can you tell us more about diffraction?”

Team Light’s second member sits up proudly. “Diffraction is observed when a wave encounters an obstacle and bends around it. Diffraction allows us to see sun rays even when the sun is hidden by a cloud. Diffraction can also be observed if waves are forced to pass through, or bounce against, very small openings. This can happen on the surface of a CD: white light hits the thin lines on the CD’s surface and they act like a prism, splitting light into multiple colors as it bounces off” (Figure 1B).

Just then, Team Sound presses their buzzer!

“But sound is a wave, too!” shouts their captain. “When sound waves experience interference, the same thing happens. Interference is what makes noise-canceling headphones work.” Team Sound goes on to explain that sound diffraction can enable sounds to travel in unexpected directions. For example, traffic noise can reach houses even if they are behind a barrier (Figure 1C). The famous “whispering galleries” in St. Paul’s Cathedral, London, and New York City’s Grand Central Station work the same way. A person speaking really quietly at one spot under the huge domed roof can be heard on the other side of the dome because sounds of higher frequency (like the ones produced while whispering) cling to the surface of the dome and travel longer distances than those produced while talking normally. Diffraction also makes sure that you hear your parent’s voice calling you for dinner even when playing behind a corner (Figure 1D) and that ticket holders at a concert can hear the music quite well, even if they are sitting behind a pillar.

“Fantastic!” cries the referee. “That is one point each!”

Round 2: Wave or Particle?

The second member of Team Light is next to press the buzzer. After a pause for effect, she proclaims: “Light is a wave and a particle, too!”, which receives a round of applause from Team Light’s supporters.

The referee looks puzzled. “Really?” she asks. “Tell us how!”

“It is extraordinary to imagine how something can be both a wave and a particle at the same time,” says the Team Light member. “But it is true! We call a particle of light a photon.”

“Interesting,” says the referee. “Would anyone from Team Sound like to respond?”

“Actually,” says the third Team Sound member, touching the rim of her spectacles, “in 2019, a team of scientists proved that sound waves also involve particles. They even trapped a single sound particle, called a phonon. We think that this discovery may be used in quantum computing in the future.”

“That is 2-all then!” the referee announces.

Round 3: The Role of Devices

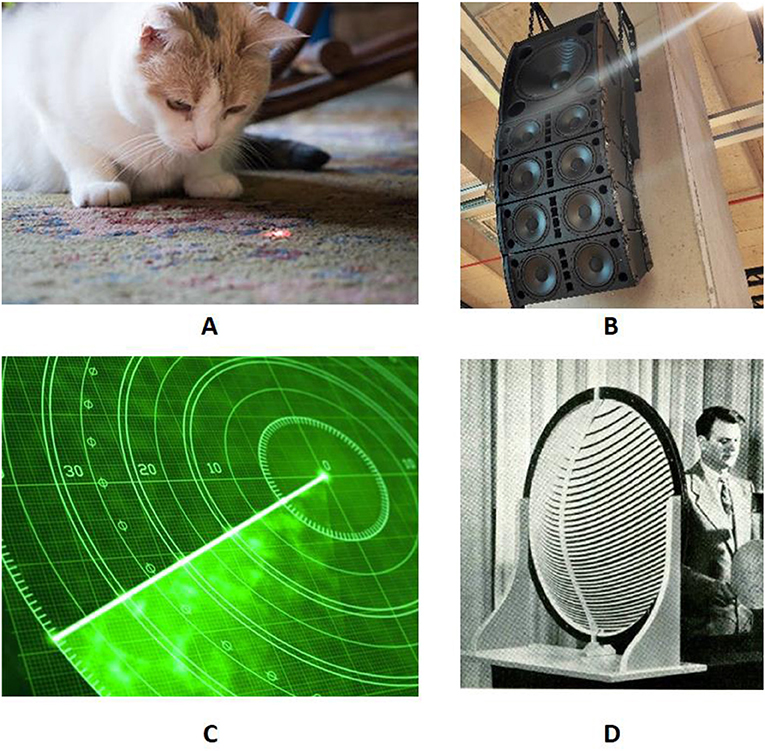

Team Light presses the buzzer again. “Light can be delivered and controlled with high precision!” says the third member. He explains that, as far back as 800 years ago, monks used magnifying glasses (which bend light) to help them copy ancient books. He also describes lasers, found in laser pointers and used to create special effects in movies and entertainment parks (Figure 2A). Then, Team Light’s captain asks the audience, “Does anyone have a £5 note I could borrow?” An audience member hands one over, and the team captain points out the note’s hologram of Big Ben, London’s famous clock tower. “We are so good at controlling light that we can make images seem three dimensional, even though they are flat! We call these images ‘holograms’,” he says.

- Figure 2 - We use devices based on precise light or sound delivery in our everyday lives.

- (A) A cat playing with the spot of light coming from a laser pointer. (B) Speakers can be arranged, typically along the shape of the letter “J” or of a banana, to maximize the sound delivered in a certain direction, as you might have experienced at concerts or simply in the assembly room of your school. (C) Sonar uses a beam of sound to detect objects underwater, for instance obstacles in the path of a submarine. The shape of the beam is created by interference, by electronically controlling the timing of different ultrasound speakers. (D) An early acoustic lens built at Bell Labs in the 1950s. In the picture, which can be found here, the lens (center) is as big as the engineer on the right (photograph credits: iStockphoto.com).

“After centuries of using lenses, mirrors, and filters, we have achieved such great control of light that most of us now carry a light-controlling device in our pockets, every day!”

“And what might that be?” asks the referee.

The captain pulls out a mobile phone. “This incredible device,” he says smugly, pointing to the display, “controls light very precisely to make images!”

Supporters of Team Light cheer enthusiastically. Surely, this is the end for Team Sound!

But Team Sound has more to say. “Until 10 years ago,” one of them says, “sound technology was very far from having such a level of control. We simply did not have the right tools. Scientists and engineers could only make sure that everyone could get the same sound—or the same silence, in the case of unwanted noise. At concerts or in auditoriums, speakers are assembled in special configurations, to make sure everyone in the audience experiences the same sound quality (Figure 2B). In cinemas, the feeling of being surrounded by sound is achieved by hiding speakers everywhere, including behind the screen. Until very recently, personalized sound delivery was only available using headphones.”

“Tell us more, Team Sound,” says the referee.

“Well,” continues the Team Sound member, “in more advanced speaker arrangements, a computer acts like the conductor of an orchestra, telling each speaker when to ‘sing,’ and using interference to deliver sound in some places but not others. Speaker configurations like these are what make sonar work. Sonar has been used since the 1930s to ‘see’ underwater (Figure 2C). The same technology is used in hospital probes that can ‘see’ inside the body using sound. Speakers can be used to deliver a scanning beam of sound to see where there is no light, or even a beam that follows a moving target. But this technology needs many speakers to work properly. Acoustic lenses, which would not require electronics, were explored in the 1950s, but until a few years ago they remained bulky and were only practical for high-pitched sounds, which have small wavelengths (Figure 2D). The way we worked with sound was centuries behind the way we could work with light.”
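The “conductor” idea can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not how any particular sonar works: the speakers sit in a straight, evenly spaced row, sound travels at 343 m/s (a typical value for air; sonar works in water, where sound is faster), and the function name `steering_delays` is made up for this example. Each speaker “sings” slightly later than its neighbor, so that the waves line up in the chosen direction.

```python
import math

SPEED_OF_SOUND = 343.0  # meters per second in air at about 20 °C (assumed)

def steering_delays(n_speakers, spacing_m, angle_deg, speed=SPEED_OF_SOUND):
    """Start time (in seconds) for each speaker in an evenly spaced row.

    `angle_deg` is the direction of the beam, measured from straight ahead.
    The earliest speaker starts at time zero.
    """
    # Extra time sound needs to cover the gap between neighbors,
    # as seen from the chosen direction.
    step = spacing_m * math.sin(math.radians(angle_deg)) / speed
    delays = [n * step for n in range(n_speakers)]
    earliest = min(delays)
    return [d - earliest for d in delays]

# Hypothetical example: 8 speakers, 2 cm apart, beam steered 30 degrees.
for number, delay in enumerate(steering_delays(8, 0.02, 30.0)):
    print(f"speaker {number}: wait {delay * 1e6:.1f} microseconds")
```

Changing `angle_deg` over time sweeps the beam from side to side, which is the scanning behavior described above. With an angle of zero, all the speakers start together and the beam goes straight ahead.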

A second team member interrupts, “But then, in 2011, scientists developed acoustic metamaterials. These are common materials, like wood, plastic, or metal, engineered so that they can shape the sound that hits or passes through them. Metamaterials were first used to manipulate light in extraordinary ways: they can even make an object invisible! But the use of metamaterials to manipulate sound will make a huge difference to our lives. Metamaterials now allow us to make acoustic lenses that can focus sound in a small spot, like the sun through a magnifying glass, and even acoustic holograms, which can bend sound waves into complex 3D shapes!”

The referee is impressed. “That is amazing,” she says. “But what are acoustic metamaterials?”

Welcome Metamaterials!

Here is what Team Sound has to say:

“Earlier, we talked about wavelength and how sound and light can be changed by interference and diffraction—by making light or sound waves bump into each other.

Visible light has a wavelength between 400 and 800 billionths of a meter. That is pretty small! But sound waves have much bigger wavelengths. A typical child can hear sound with a wavelength between 17 millimeters and 17 meters. Because metamaterials are engineered at a scale much smaller than the length of sound or light waves, they are tricky to make for light. But for sound, with its larger wavelengths, creating metamaterials is a lot easier. Objects that are smaller than the wavelength of sound can actually be made with a standard 3D printer!”
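The wavelengths quoted by Team Sound can be checked with one simple rule: wavelength equals the speed of the wave divided by its frequency. The Python sketch below assumes a speed of sound of 343 m/s (air at about 20 °C) and a hearing range from 20 Hz to 20,000 Hz. Neither number is given in the text above; both are common textbook values.

```python
SPEED_OF_SOUND = 343.0  # meters per second in air at about 20 °C (assumed)

def wavelength_m(frequency_hz, speed=SPEED_OF_SOUND):
    """Wavelength in meters of a wave with the given frequency."""
    return speed / frequency_hz

# Assumed limits of hearing: 20 Hz (very low) to 20,000 Hz (very high).
lowest_note = wavelength_m(20.0)
highest_note = wavelength_m(20_000.0)

print(f"lowest note:  {lowest_note:.1f} meters")
print(f"highest note: {highest_note * 1000:.0f} millimeters")
```

Under these assumptions, the lowest note comes out at about 17 meters and the highest at about 17 millimeters, matching the range Team Sound describes.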

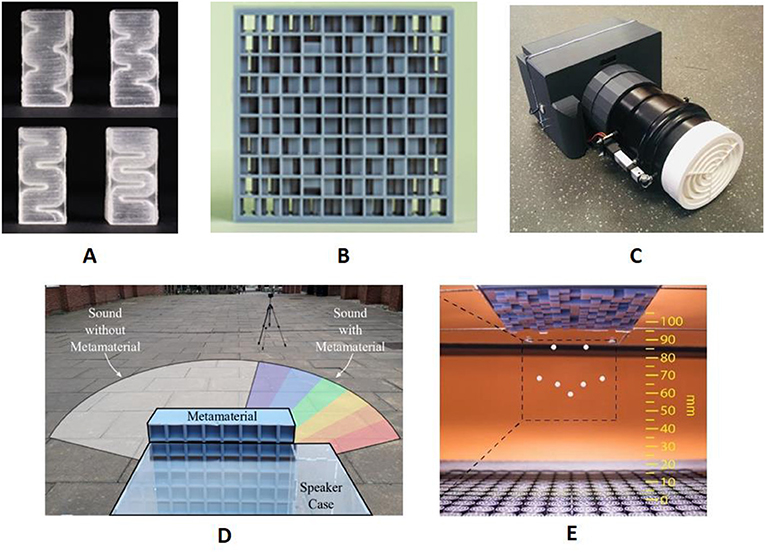

Another member of Team Sound adds, “Acoustic metamaterials are made of small parts, called unit cells or bricks (Figure 3A). The shape of these unit cells is what does the trick: they are precisely designed so that each cell uses diffraction and interference to modify the sound going through it. We can use them to change the intensity of sound or to delay, or even trap, sound (for more information on basic metamaterials, please see this Frontiers for Young Minds article). We can now apply the knowledge gained from studying light to solving problems in acoustics.”

- Figure 3 - (A) Metamaterial bricks (2 cm × 1 cm in the picture) are designed with winding paths inside, which the sound must travel through.

- The longer the path, the longer the sound is delayed inside the brick. (B) Lenses are one possible way to assemble metamaterial bricks. Acoustic lenses could be used to send messages to a single person in a crowd or to create the acoustic equivalent of a lighthouse, with a beam of sound delivering messages along a line of chairs in a theater. Each square brick in this lens is 1 cm in size, so the whole lens is as big as the hand of a human adult. (C) In this levitation experiment with multiple objects, the speakers are at the bottom and the metamaterial is on the top. The polystyrene balls are held in traps made of sound, whose shape is encoded in the metamaterial. (D) A sound projector. Two metamaterial lenses (white), like the one in (B), are attached to motors that change their mutual distance. Using a movement sensor, like the one used by the Xbox console, allows acoustic signals to be delivered to a moving person. (E) In this experiment, the metamaterial placed in front of the speaker acts like a prism, splitting melodies (that contain multiple notes) into an “acoustic rainbow,” where each note goes in a different direction, like the colors in a rainbow (photograph credits: Sussex University).
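The link between path length and delay in a brick can be put into numbers. The Python sketch below is a rough illustration only: it treats the winding path as a simple longer tube, and the path length and note frequency are made-up values. Only the 2 cm brick length comes from the caption. The function names are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # meters per second in air at about 20 °C (assumed)

def brick_delay_s(path_length_m, speed=SPEED_OF_SOUND):
    """Time in seconds that sound needs to travel along the path in a brick."""
    return path_length_m / speed

def extra_phase_rad(path_length_m, straight_length_m, frequency_hz,
                    speed=SPEED_OF_SOUND):
    """How far behind (as an angle between 0 and 2*pi) the sound comes out,
    compared with sound that went straight through the brick."""
    wavelength = speed / frequency_hz
    extra_path = path_length_m - straight_length_m
    return (2 * math.pi * extra_path / wavelength) % (2 * math.pi)

straight = 0.02   # 2 cm: length of the brick in Figure 3A
winding = 0.07    # 7 cm: made-up length of the winding path inside it
note = 3430.0     # made-up frequency, chosen so the wavelength is 10 cm

print(f"delay: {brick_delay_s(winding) * 1e6:.0f} microseconds")
print(f"lag:   {extra_phase_rad(winding, straight, note):.2f} radians")
```

In this example the winding path adds 5 cm, which is half of the assumed 10 cm wavelength, so the sound leaves the brick half a cycle behind sound that took the straight route.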

“And,” adds the team captain, “only a limited number of unit cells is needed [1]. Just as any word can be made from combinations of just 26 letters in the Latin alphabet, we can make acoustic metamaterials from a collection of just 16 different bricks. Like LEGO® bricks, they can be assembled into useful structures such as lenses that fit into the palm of the hand (Figure 3B). When unit cells work together, they can achieve incredible feats” (to read more about the acoustic superpowers of moths, see this Frontiers for Young Minds article).

He continues, “Acoustic lenses are based on diffraction but can be used to magnify and direct sound, just like lenses for light. We can use these lenses to set the direction of sound from loudspeakers and, like a lens for light creates the beam of a lighthouse, we can shape a beam of sound that travels great distances. Imagine how this might help us deliver sound effects in a cinema or theater [2]!”
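How might one choose which of the 16 bricks goes where in a lens? The Python sketch below shows one possible recipe for a single row of bricks. It is an illustration under several assumptions that are not stated in the text: the 16 bricks delay sound by 16 evenly spaced amounts covering one full cycle, sound reaches the lens as a flat wave, and the frequency and focal distance are made-up values. The idea is that sound from the edge of the lens has farther to travel to the focus, so bricks near the middle must hold the sound back longer for everything to arrive together.

```python
import math

SPEED_OF_SOUND = 343.0  # meters per second in air at about 20 °C (assumed)
N_BRICK_TYPES = 16      # from the text; evenly spaced delays are assumed

def lens_brick_choices(n_bricks, brick_size_m, focal_length_m, frequency_hz,
                       speed=SPEED_OF_SOUND):
    """Pick a brick type (0 to 15) for each position in one row of a lens."""
    wavelength = speed / frequency_hz
    center = (n_bricks - 1) / 2
    # Position of each brick, measured from the middle of the row.
    positions = [(i - center) * brick_size_m for i in range(n_bricks)]
    # Distance from each brick to the focus point in front of the lens.
    paths = [math.hypot(x, focal_length_m) for x in positions]
    longest = max(paths)
    step = 2 * math.pi / N_BRICK_TYPES
    choices = []
    for path in paths:
        # Delay needed so this brick's sound arrives with the slowest one.
        lag = (2 * math.pi * (longest - path) / wavelength) % (2 * math.pi)
        # Round to the nearest of the 16 available bricks.
        choices.append(round(lag / step) % N_BRICK_TYPES)
    return choices

# Hypothetical lens row: 11 bricks of 1 cm, focus 10 cm away, 8,000 Hz note.
row = lens_brick_choices(11, 0.01, 0.10, 8000.0)
print(row)
```

The list that comes out is symmetric: the two edge bricks add no delay, and the delay grows toward the middle of the row. A full lens would repeat the same calculation across a two-dimensional grid.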

The second member of Team Sound adds, “We can also combine two acoustic lenses, similar to the way optical lenses are used in cameras and telescopes. By adjusting the distance between the lenses, we can deliver sound to a specific location. We can use a programmable circuit board and a motor, in a set-up that looks like a camera, to create the acoustic equivalent of ‘autofocus’ (Figure 3D), with which we can deliver sound to a moving person tracked by an Xbox Kinect [3]. With further development, sound delivery in a crowded school cafeteria will be possible. Line cues could be sent to actors on stage without using headphones. Acoustic metamaterials could allow customers more privacy when talking to bank staff, doctors, receptionists, or inside a booth in a cafe.”

There is no stopping Team Sound now! The third team-mate takes over:

“We have also explored acoustic diffraction. Inspired by the rainbows on CDs, we created an experience called ‘acoustic rainbows’ [4]. The experience is simple: a metamaterial is placed in front of a speaker, so that it splits the music going through, sending the notes in different directions (Figure 3E). We played pieces of music, made by different local composers, and asked people to describe what they felt while exploring the space in front of our metasurface. They used the language of light and vision, talking about colors rather than sounds. They told us that, while they had learnt about colors in primary school and could recognize the changes in sound during the experience, they did not have the right words to describe what they called ‘an acoustic rainbow.’ Perhaps acoustic rainbows could be used by musicians to interact playfully with their audiences, by sending different notes to different places! This could transform our experience of music! Just imagine how our projectors could transform virtual reality experiences!”

“And finally,” concludes the captain, “3D-printed surfaces made of bricks, thinner than one wavelength, have been used to create acoustic holograms. These sculptures made of sound are precise enough to levitate small objects in mid-air, and even to hold multiple objects in the shape of smiling faces [5] (Figure 3C)” (to read more about levitating objects using sound, see this Frontiers for Young Minds article).

And The Winner Is…

“There is so much incredibly exciting work going on in acoustics!” says the referee. “The acoustic metamaterials you describe sound amazing and I am really looking forward to finding out more about them!” Then, Team Sound plays their trump card: “We believe that acoustic metamaterials will even give us silent hospitals and offices!” Everyone starts clapping.

With the scores standing at three-all, the referee concludes, “We will definitely need a re-match in a few years’ time. Congratulations to both teams for all your fantastic work!”

Which team do you think might come up with the next great invention? Perhaps you will decide the winner one day!

Glossary

Wavelength: This is the distance between two consecutive peaks (or troughs) in a wave. It is typically used as a unit of scale to decide how big objects are compared to the wave. While for visible light the wavelength is very small, smaller than the smallest hair, the wavelength of the sounds we can hear varies between about 1.7 cm and 17 meters. It is worth noting that each primary color has its own wavelength. More on this in the article “A Science Busker Guide to sound”.

Interference: This is a phenomenon that happens when two waves of the same frequency overlap in the same location. In a nutshell, if their peaks (and their troughs) are in the same location at the same time, they add up and a louder sound is obtained. If instead the peaks of one wave meet the troughs of the other, they cancel out. More on interference in the article “A Science Busker Guide to sound” and on BBC Bitesize.

Two-slit Experiment: Imagine a source of red light (on the left) in front of a barrier with two vertical slits (in the middle). Light passes through the slits and we observe it on a screen on the right. When a single slit is open, a spot of light appears on the screen. When both slits are open, a pattern of alternating dark and light lines appears on the screen. More can be found on Britannica Kids.

Diffraction: This is a phenomenon that occurs when a wave hits something of a similar size to its wavelength: many smaller waves come out from the corners. Imagine a sea wave hitting a rock. More can be found on BBC Bitesize.

Photon: This is the particle of light. The name comes from the Greek word “photos,” which means light.

Phonon: This is the particle of sound. The name comes from the Greek word “phonē,” which means sound or voice.

Sonar: A sound-based device that is used to determine the size and the distance of objects underwater. Originally invented for detecting submarines, it is now used to detect fish during fishing trips, obstacles during navigation, and sunken ships. It works using different acoustic sources that interfere with each other to create a scanning beam. The acronym “sonar” stands for “SOund NAvigation and Ranging”. More on Britannica Kids.

Acoustic Lens: A “lens” is a common device when we are talking about light: for example, a magnifying glass, the lenses used in spectacles to correct shortsightedness, or the objective of a telescope. An acoustic lens is a device that has the same effects, but for sound. A converging lens (for sound) would focus the emission of a loudspeaker on a spot. A diverging lens (for sound) might be used to spread the high-frequency sound of a loudspeaker over large angles. More on lenses for light on Britannica Kids.

Acoustic Metamaterials: A new type of material whose properties do not come from the chemistry of the base material, but from how it is engineered. The key point is that the engineering needs to be precise enough to work at a scale smaller than the wavelength. More on the definition of a metamaterial can be found here.

Conflict of Interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

GM’s work cited in this article has been funded by UK Research and Innovation through the project “AURORA” (grant EP/S001832/1).

References

[1] Memoli, G., Caleap, M., Asakawa, M., Sahoo, D., Drinkwater, B., and Subramanian, S. 2017. Metamaterial bricks and quantization of meta-surfaces. Nat. Commun. 8:14608. doi: 10.1038/ncomms14608

[2] Memoli, G., Chisari, L., Eccles, J., Caleap, M., Drinkwater, B., and Subramanian, S. 2019. “VARI-SOUND: a varifocal lens for sound,” in Proceedings of CHI 2019, Paper No. 483 (Glasgow). p. 1–14. doi: 10.1145/3290605.3300713

[3] Rajguru, C., Blaszczak, D., PourYazdan, A., Graham, T. J., and Memoli, G. 2019. “AUDIOZOOM: location based sound delivery system,” in SIGGRAPH Asia (Brisbane, QLD). p. 1–2. doi: 10.1145/3355056.3364596

[4] Graham, T., Magnusson, T. R. C., Pouryazdan, A., Jacobs, A., and Memoli, G. 2019. “Composing spatial soundscapes using acoustic metasurfaces,” in Proceedings of ACM AudioMostly AM’19 (Nottingham). p. 103–10. doi: 10.1145/3356590.3356607

[5] Polychronopoulos, S., and Memoli, G. 2020. Acoustic levitation with optimized reflective metamaterials. Sci. Rep. 10:4254. doi: 10.1038/s41598-020-60978-4