Abstract

We automatically use more than one of our senses to understand the world around us. When having a conversation, most of us use our ears to listen to what other people are saying. But you might be surprised to know that what you see is also very important in helping you to understand others. This article looks at how hearing and sight are combined and explains how both of these senses help us to understand speech. We demonstrate how important it is to see the faces of people who are talking, particularly when we are in noisy places. This has important implications for wearing face masks! We need to think carefully about how face masks may affect people’s ability to communicate with each other.

Introduction

Do you ever struggle to understand what your friends or teachers are saying when they are wearing face masks? Why is this? Nearly everyone benefits from seeing people’s faces while they are talking. The various sounds people make when they talk require them to move their mouths in unique ways, and even though you might not be aware of it, your brain understands those specific mouth shapes! The shapes make it easier for us to understand people when we can see them talking.

The brain has specialized areas that process information from the senses and allow us to understand the world. Some of these brain areas automatically combine information across multiple senses. When information from one sense (hearing, for example) is unreliable, then we automatically rely more on information from another sense (like vision). This is why you will particularly notice the benefits of seeing people speak when you are in a noisy place.

In this article, we will begin by introducing you to the difference between sensation and perception, and we will use a well-known visual illusion to demonstrate how perception can sometimes be inaccurate. This will help you to understand that what you perceive does not necessarily correspond to the information that is in the world. We will then explain how our brains combine information across different senses, particularly sight and hearing.

Making Sense of the World

Your ears pick up sound waves and you hear them as sound. Your eyes pick up light energy, which gives you sight. We call these processes sensation. What your brain then does with the information from your senses is called perception. Most of the time, we assume that what we sense and what we perceive are the same thing. But sometimes our brains come to the wrong conclusions about what is out there! Perception can be a tricky job: it involves combining what our senses are telling us with what we already know about the world, to make the best possible guess about what is happening. We can misunderstand, or misinterpret, sensations, and then perceive something that just is not true.

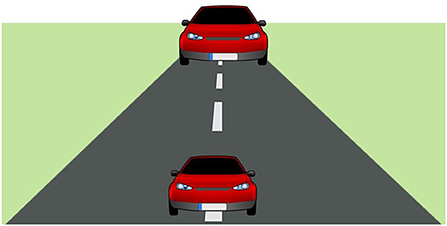

Visual illusions can be fascinating to look at, and they give us a really good example of how the brain can sometimes misinterpret sensations and give us the wrong perception. Have a look at Figure 1. Which car do you think is bigger? Now take out a ruler and measure them. Were you right?

- Figure 1 - Visual illusion.

- These cars are the same size, yet the top car is usually perceived to be larger. This is because the cues in the picture tell us the top car is further away, so the brain automatically “scales up” the image. This example shows us that what our senses observe and what our brains perceive are not always the same.

At first glance, you might think the top car is larger than the bottom one, but they are actually the same size! We call this a visual illusion because the picture fools your brain into thinking the cars are different sizes. This works because clues in the picture (the shape of the road, in this example) make the top car appear to be further away than the bottom car. Normally, things that are far away look smaller than things close to us (next time you are on a car journey, compare the size of the cars in the distance with those close by). So a car at the top of the road, further away, would normally look smaller than a car at the bottom. The brain knows this, so when the top car looks just as big as the bottom one, it automatically “scales up” its perception of the car’s size. In real life, this mental adjustment usually works well and helps our perception of the world to make sense.

Combining Our Senses

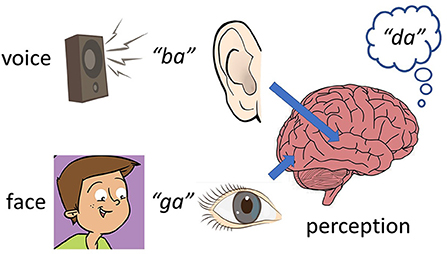

We know that our brains often use both vision and hearing to understand the world. Using two senses can make it easier for our brains to work out what is going on than using only one. But in the same way that a visual illusion can play tricks on the brain via the eyes, an audio-visual illusion can confuse perception when the information coming from hearing and vision does not match. This was shown in an experiment performed by Harry McGurk and John MacDonald in 1976 [1]. These scientists recorded a person saying the sound “ba.” They then made a silent video of the same person saying the sound “ga.” They played the sound recording (“ba”) alongside the video (“ga”) and asked volunteers to say what sound they heard. You might be surprised to learn that most volunteers said they heard the sound “da”! This is because their brains mashed up what they saw and heard and made a guess at what the sound really was. The amazing thing about this illusion, called the McGurk effect (Figure 2), is that it really does change what people hear. The experiment showed that seeing a talking face can have a powerful effect on the sounds people perceive (see Footnote 1). The effect is also found with other combinations of sounds. The perception of speech is really the result of the brain making the best guess it can from what we see, what we hear, and what we already know about language.

- Figure 2 - The McGurk effect.

- In this illusion, hearing one sound (e.g., “ba”) while also seeing the mouth movements that normally accompany another sound (e.g., “ga”) often results in listeners perceiving a new sound (e.g., “da”). This tells us that the brain automatically combines information across the senses to help us understand the world.

How Sight Helps Us Hear in Noisy Places

In the real world, lip movements usually match the sounds coming from people’s mouths, so seeing someone talk usually helps us to perceive the right word. This visual information tends to be most helpful when the conversation takes place in a noisy setting. Try sitting in a quiet room with a friend. You will probably find it quite easy to understand what your friend is saying. Now turn the television on with the volume up loud. How easy is it to understand your friend now? It is much harder to hear and understand what someone is saying if the background is very noisy. If you look at your friend’s face and lips, you are more likely to correctly guess what is being said.

Measuring How Vision Affects Understanding of Speech

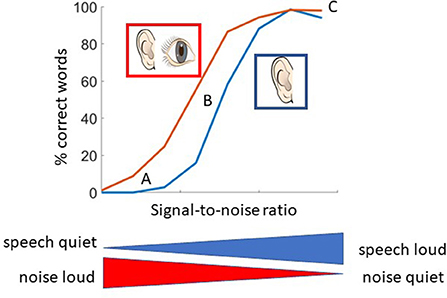

We carried out some experiments on how background noise affects the understanding of sentences [2]. First, we made video and sound recordings of a volunteer saying several different sentences (such as “A large size in shoes is hard to sell.”). Then we played these recordings to other volunteers, asked them to repeat what they heard, and counted how many words they got right. We repeated this experiment with lots of volunteers, either adding different levels of background noise or reducing the volume of the speech. How loud the speech is compared with the background noise is called the signal-to-noise ratio. Sometimes we played only the sound of the person speaking, and other times we also showed the video, so volunteers could both see and hear the person speaking.
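Scientists usually express the signal-to-noise ratio in decibels (dB). The short Python sketch below is only an illustration, not the method described in reference [2]: it assumes the speech and the noise are each described by a single power value in the same units, and the function name `snr_db` is made up for this example. A value of 0 dB means the speech and noise are equally strong, positive values mean the speech is stronger, and negative values mean the noise is stronger.

```python
import math


def snr_db(signal_power: float, noise_power: float) -> float:
    """Return the signal-to-noise ratio in decibels (dB).

    Hypothetical helper for illustration: both powers must be positive
    and measured in the same units.
    """
    if signal_power <= 0 or noise_power <= 0:
        raise ValueError("powers must be positive")
    # Decibels use a logarithmic scale: every factor of 10 in power adds 10 dB.
    return 10 * math.log10(signal_power / noise_power)


# Speech and noise equally strong: 0 dB.
print(snr_db(1.0, 1.0))
# Speech 10 times more powerful than the noise: +10 dB.
print(snr_db(10.0, 1.0))
# Noise 10 times more powerful than the speech: -10 dB.
print(snr_db(1.0, 10.0))
```

If speech and noise are measured as sound-pressure amplitudes instead of power, the same comparison uses a factor of 20 instead of 10.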

We plotted the results on a graph (Figure 3). When the background noise was much louder than the person speaking, our volunteers could not identify any words correctly. But when the sentences were louder than the background noise, our volunteers heard most of the words correctly. Between these two situations, volunteers could identify some, but not all, of the words correctly. Our results clearly show that people can understand speech in louder background noise if they can also see the talker’s face. This explains why you seem to hear better when you can see people speaking, especially in a noisy place. Previous studies have found similar results [3].

- Figure 3 - When the background noise was much louder than the person speaking (low signal-to-noise ratio), volunteers could not identify any words correctly (A). But when sentences were louder than background noise, volunteers heard most of the words correctly (C). In between, volunteers could identify some, but not all, words correctly (B). When volunteers could both see and hear the person talking (red line), they got more words correct than when they could only hear the talker (blue line). So, it is easier to understand speech when we can both see and hear the speaker.

Why is this important? Imagine you are working in a noisy factory with loud, dangerous machinery. Now imagine your co-worker is trying to explain exactly how to use a machine. With all that noise in the background, do you think you would be able to hear the instructions clearly? What if your co-worker was wearing a safety mask while talking? In this sort of environment, making a mistake could be very dangerous and might even result in injury.

Benefits of Visual Information for People With Hearing Loss

Have you ever tried to understand what someone is saying just by looking at their face, without hearing their words? You may find you are better at this task if you have poorer hearing. People who are deaf or hearing impaired are generally better at lip reading than people with typical hearing [4]. This skill probably develops over time, as people with hearing loss learn to pay closer attention to what they see.

During the COVID-19 pandemic, people were often required to wear face masks. Because masks cover the mouth, they make lip reading very difficult for the people who rely on it. In the UK, campaigners helped persuade the government to provide see-through face masks to help deaf people communicate. In our research, we try to understand how lip reading helps communication, especially for people with hearing problems.

Conclusion

Visual information is really important to help us understand the sounds we hear. What we see is especially important for understanding speech when the sound we are listening to is affected by either background noise or poor hearing. There is still a lot we do not understand about how the brain combines what we see and what we hear. Next time you are in a noisy environment, think about how much you rely on seeing the faces of the people who are speaking, and about what could be done to make visual speech information more accessible for people who have trouble hearing.

Glossary

Sensation: The process where our senses pick up information in the world.

Perception: The process where our brains make sense of information provided by the senses.

McGurk Effect: An audio-visual illusion in which hearing one sound (e.g., “ba”) while also seeing the mouth movements that normally accompany another sound (e.g., “ga”) results in the perception of a new sound (e.g., “da”).

Signal-to-Noise Ratio: A measure of the strength of the “signal” (in our case, speech) compared with the background noise.

Lip Reading: Understanding speech from mouth movements alone.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Footnote

1. Have a look at this video for a demonstration of the effect: https://www.youtube.com/watch?v=jtsfidRq2tw&t=20s.

References

[1] McGurk, H., and MacDonald, J. 1976. Hearing lips and seeing voices. Nature 264:746–8.

[2] Stacey, P. C., Kitterick, P. T., Morris, S. D., and Sumner, C. J. 2016. The contribution of visual information to the perception of speech in noise with and without informative temporal fine structure. Hear. Res. 336:17–28. doi: 10.1016/j.heares.2016.04.002

[3] Sumby, W. H., and Pollack, I. 1954. Visual contribution to speech intelligibility in noise. J. Acoust. Soc. Am. 26:212–5.

[4] Schreitmüller, S., Frenken, M., Bentz, L., Ortmann, M., Walger, M., and Meister, H. 2018. Validating a method to assess lipreading, audiovisual gain, and integration during speech reception with cochlear-implanted and normal-hearing subjects using a talking head. Ear Hear. 39:503–16. doi: 10.1097/AUD.0000000000000502