Abstract

Speaking is an important part of our lives. We use it to communicate with our families, friends, and even our pets! Not only do we talk a lot, but we are also very good at it. Healthy speakers can say 2–3 words per second and usually make an error only about once every 1,000 words. To limit the number of errors we make, we continuously monitor our own speech. While speaking feels easy, the brain process of monitoring our own speech is quite complex. In this article, we explain how we select a word, what happens when a speech error is made, and what could happen if the parts of the brain responsible for monitoring speech are damaged.

Speech and Speech Errors

We speak every day. We talk to our parents, our friends, and even our pets! Healthy, fluent speakers can say 2–3 words per second, selecting each one from the brain’s word bank, which holds over 50,000 words in adults! However, every now and then, our tongue slips and we make a speech error. Luckily, this only happens about once every 1,000 words we say [1]. The process of catching errors and correcting ourselves when we talk is more complicated than it seems. Take, for example, naming a picture of a cow. When we think of a cow, we generally think of a mammal that lives on a farm and makes milk. Even though the word “cow” comes to mind, it may not be the only word that does, and we may accidentally say “horse” instead. The human brain has mechanisms in place to help us quickly realize when we are about to make a mistake. Researchers who study how we speak call this type of control speech monitoring.
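To get a feel for these numbers, here is a small back-of-the-envelope calculation. It is only a sketch: it assumes someone talks nonstop at a steady rate, which nobody really does, and the function name `seconds_between_errors` is made up for this example.

```python
def seconds_between_errors(words_per_second, words_per_error=1000):
    """Seconds of nonstop talking before one slip is expected.

    Assumes a steady speaking rate and one error per `words_per_error`
    words (the rough figures quoted in the text).
    """
    return words_per_error / words_per_second


for rate in (2, 3):
    minutes = seconds_between_errors(rate) / 60
    print(f"{rate} words per second: about {minutes:.1f} minutes of talking per slip")
```

In other words, at these rates a fluent speaker would have to talk continuously for several minutes before we would expect a single slip.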

Before we can understand how exactly we monitor our speech, let us break down what happens in the brain when we want to speak.

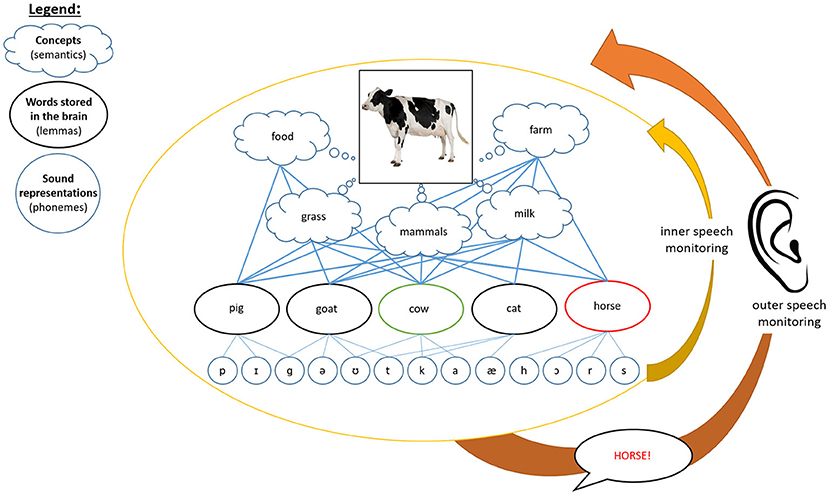

When we want to say a word like “cow,” we first need to think of the concepts relating to that word, such as “farm,” “milk,” and “mammal.” However, the concepts associated with “cow” can also be associated with other words. We may also think of a horse, cat, goat, or pig when thinking of the same concepts (Figure 1). The word representations for all these other animals and the related concepts are all active in the brain when we want to say “cow,” which can make it difficult for us to select the correct word. Then, to say the word aloud, we need to access the sound representations (called phonemes) that correspond to the words we want to say. All of this is happening very quickly in our brains, every time we speak!
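The competition between words described above can be imitated with a toy program. This is a simplified sketch, not a model of the real brain: the concept lists and the names `WORD_CONCEPTS`, `activations`, and `select_word` are invented for illustration, and "activation" is just a count of shared concepts.

```python
# Toy word bank: each word is linked to a few concepts.
# These lists are made up for illustration only.
WORD_CONCEPTS = {
    "cow": {"farm", "milk", "mammal"},
    "horse": {"farm", "mammal"},
    "pig": {"farm", "mammal"},
    "cat": {"mammal"},
}


def activations(active_concepts):
    """Count how many of the active concepts each word shares."""
    return {
        word: len(concepts & active_concepts)
        for word, concepts in WORD_CONCEPTS.items()
    }


def select_word(active_concepts):
    """Pick the word with the highest activation."""
    scores = activations(active_concepts)
    return max(scores, key=scores.get)


picture_concepts = {"farm", "milk", "mammal"}
print(activations(picture_concepts))
print(select_word(picture_concepts))
```

In this toy version, “cow” wins because it shares the most concepts with the picture, but “horse” and “pig” are close behind. That small gap is a simple way to picture why a related word can sometimes slip out instead.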

- Figure 1 - What happens in our brain when we detect an error made when naming a picture?

- First, we see the picture and think of all the concepts associated with it. In parallel, other words come to mind that are associated with those same concepts. To say the word aloud, we then select a word representation and its phonemes. Meanwhile, our brain is monitoring our speech before (inner speech monitoring) and after (outer speech monitoring) we speak. At any of these steps, an error can occur. A mechanism inside the medial frontal cortex (MFC) monitors errors and resolves conflict within each stage.

However, speech production is not perfect. We all make mistakes when speaking, especially when there are conflicting messages in our brain about which word or sound choices may be correct. Any of the steps we just described can go wrong, leading to all kinds of speech errors. For example, if we select the wrong word, one that is related in meaning to the one we wanted to say (like saying “horse” instead of “cow”), we make a semantic error. If, instead, we string together the wrong phonemes and say something like “cav,” we make a phonological error.

Understanding how the brain monitors speech is important because monitoring is what lets us catch and fix our errors as we talk. Some people lose the ability to monitor their speech, and researchers want to understand how speech monitoring sometimes breaks down. By studying how people speak and the types of errors they make, researchers have found two pathways that allow us to catch and potentially correct our errors before and after we speak. These pathways are known as the inner and outer loops of speech monitoring [1]. The inner loop allows us to monitor our words before we speak, to select the correct word to say (yellow in Figure 1). The outer loop monitors our speech after we have said words out loud and are able to hear whether or not we made an error (orange in Figure 1).

How Do We Study Speech and Error Monitoring in the Brain?

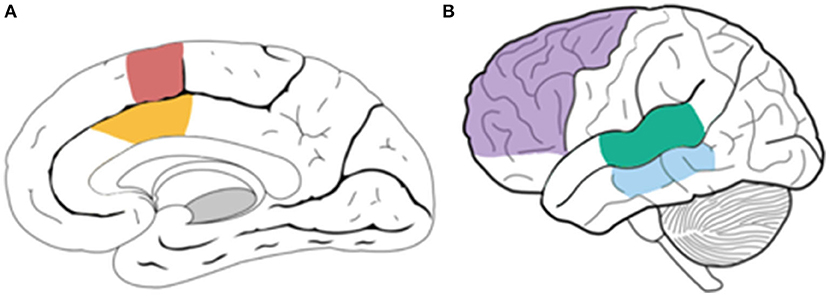

Researchers have learned about brain regions that may support speech monitoring and speech production by using brain-imaging techniques like functional magnetic resonance imaging (fMRI). fMRI measures brain activity by detecting changes associated with blood flow. When you are using a part of your brain, blood flow to that area increases. When you are not using a brain region, blood flow to that region decreases. Using fMRI, researchers found that two main brain regions seem to be particularly important in supporting speech monitoring. One is the medial frontal cortex (MFC), specifically the dorsal anterior cingulate cortex (dACC) and the pre-supplementary motor area (pre-SMA; Figure 2A). The second main region is the posterior superior temporal gyrus (pSTG; Figure 2B). The engagement of the pSTG in speech monitoring was discovered using tasks in which people’s speech was distorted while they were speaking [2]. This research suggests that when your speech is distorted, like when you hear yourself speak underwater, your pSTG is more active compared to when your speech is not distorted, like when you hear yourself speak above water. In contrast, fMRI studies showed that the dACC and the pre-SMA are activated when we hear feedback from our normal non-distorted speech, and when there is a lot of conflicting information in the brain. In addition, other brain regions are known to be very important for speech production, including the left prefrontal cortex and the left posterior middle temporal gyrus (Figure 2B). As you can see, many brain regions are important in speech production!

- Figure 2 - Brain regions that appear to be active when we monitor our speech.

- (A) Inside view of the brain. Both the dACC (yellow) and the pre-SMA (pink) seem to be involved in inner speech monitoring. (B) Outside view of the brain. The pSTG (green) seems to be associated with outer speech monitoring. The left prefrontal cortex (PFC; purple) and the left posterior middle temporal gyrus (MTG; blue) appear to play a role in speech production.

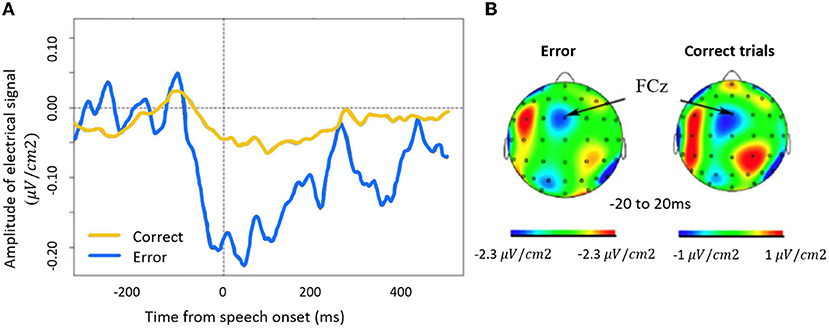

However, even though fMRI is great at telling us which parts of the brain are active, it cannot tell us precisely when these regions are active. Timing is really important given how fast we speak! To find out when these regions are active, researchers use another technique called electroencephalography (EEG), which records electrical activity from nerve cells in the brain through electrodes placed on the scalp. EEG tracks changes in brain activity from one millisecond to the next. Scientists found an electrical signal coming from the MFC that starts to rise before we start speaking. Because it starts before we can hear ourselves speak, this signal may be associated with the inner loop of speech monitoring. Because it was first observed when speech errors were about to be made, researchers call it the error-related negativity (ERN), but it is also present before correct speech, only smaller [3]. You can see the ERN in Figure 3.

- Figure 3 - (A) Patients with damage to the prefrontal cortex were given a picture-naming task in which the ERN was measured in correct and incorrect trials using EEG.

- The X-axis shows time in milliseconds, with “0” marking the start of speech. The Y-axis shows the size of the electrical signal from the brain. The signal starts before speech onset and is larger in errors compared to when a correct response is made. (B) A topographic map showing the ERN recorded above the MFC on the scalp (Figure credit: [5], with permission).
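One common way to see a signal like the ERN is to average the EEG recordings from many trials, lined up at the start of speech, and then compare error trials with correct trials. The sketch below illustrates that idea only. The voltages are made-up numbers, not data from any study, and the function names `average_waveform` and `peak_negativity` are invented for this example.

```python
from statistics import mean


def average_waveform(trials):
    """Average several trials sample by sample.

    Each trial is a list of voltages (in microvolts) lined up at speech
    onset; all trials must have the same number of samples.
    """
    return [mean(samples) for samples in zip(*trials)]


def peak_negativity(waveform):
    """Return the most negative voltage in the averaged waveform."""
    return min(waveform)


# Made-up voltages for illustration only (not real recordings).
correct_trials = [
    [0.0, -1.0, -2.0, -1.0, 0.0],
    [0.0, -1.0, -3.0, -1.0, 0.0],
]
error_trials = [
    [0.0, -2.0, -6.0, -3.0, 0.0],
    [0.0, -3.0, -8.0, -4.0, 0.0],
]

correct_peak = peak_negativity(average_waveform(correct_trials))
error_peak = peak_negativity(average_waveform(error_trials))
print(f"Correct trials peak: {correct_peak} microvolts")
print(f"Error trials peak: {error_peak} microvolts")
```

Because the ERN is a negative signal, a "larger" ERN means a more negative peak. In this invented example, the averaged error trials dip further below zero than the averaged correct trials, which is the pattern described for Figure 3.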

Why Do People Lose the Ability to Monitor What They Say?

Just like we need to eat our vegetables and exercise to be strong and healthy, our brain needs lots of oxygen and nutrients to function. Blood carries oxygen and nutrients to the brain through arteries. Unfortunately, sometimes these arteries get clogged or break and cause a type of brain-tissue damage called a stroke, due to lack of sufficient oxygen or nutrients to a brain region. If this region is an area of the brain that is important for language, some people may develop difficulty speaking or understanding what is said to them.

When people lose the ability to understand or express speech resulting from damage to the brain, it is called aphasia. Some people with aphasia may no longer be aware of the speech errors they are making. Those people might find it more difficult to recover their language skills—researchers have found that the ability to detect speech errors predicts how well someone with aphasia will benefit from speech therapy [4]. This tells us that, to improve their speech, people with aphasia first need to know they are making errors. When we are trying to learn anything new, the only way for us to improve is to practice and catch our errors. However, scientists do not yet fully understand why people with aphasia can lose the ability to monitor their speech. As explained earlier, certain brain regions are involved in speech production and speech monitoring. Could it be that certain brain regions are more important than others for making us aware of our errors?

In our study [5], we asked people with brain damage in the left and right prefrontal cortex and people with no brain damage to name pictures while we recorded their brain activity. We found that people with brain damage in the left prefrontal cortex made more errors and had slower verbal response times than people with no brain damage. However, these individuals still had a larger ERN, recorded above the MFC, when they made errors than when they responded correctly (Figure 3). Because this error signal was still present despite the damage, the left prefrontal cortex is probably not critical for the inner loop of speech monitoring, or at least not when we are simply naming pictures.

Where to Go From Here?

As you now know, a lot of work goes into producing and monitoring our speech. There is still a lot that researchers need to learn about how the brain monitors speech, and specifically about why people can lose the ability to monitor their speech after stroke. Current studies in our lab are investigating how damage to regions in the left temporal lobe affects speech-monitoring abilities and the ERN in people with aphasia. If a person with aphasia no longer shows the expected ERN pattern, this may indicate that their ability to monitor speech is impaired. As you can see, there is still so much we need to learn and discover. This is what makes language research exciting!

Glossary

Speech Monitoring: The brain process of monitoring and controlling what we say, both before and after we say it, to help us prevent or identify errors in our speech.

Phoneme: A sound representation stored in the brain. Phonemes (sounds) are strung together to make whole words, just like letters of the alphabet are strung together to spell them!

Semantic Error: A violation in the retrieval of a word in the brain by selecting a word that shares similar features to another (e.g., saying horse instead of cow).

Phonological Error: A violation in the retrieval of a sound in the brain by selecting a sound that is similar to another (e.g., saying hat instead of cat).

Functional Magnetic Resonance Imaging (fMRI): A brain-imaging technique that measures changes in blood flow to the brain.

Electroencephalography (EEG): A brain-imaging technique that records electrical activity from neurons in the brain.

Error-Related Negativity (ERN): An electrical signal in the brain that starts to rise before speech and is larger when speech errors are made compared to correct responses.

Aphasia: Language difficulty acquired from brain injury. There are currently eight different forms of aphasia depending on what part of the brain is affected.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work was funded by NSF CAREER award #2143805 and NIDCD grant 1R21DC016985 to SR. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Original Source Article

Riès, S. K., Xie, K., Haaland, K. Y., Dronkers, N. F., and Knight, R. T. 2013. Role of the lateral prefrontal cortex in speech monitoring. Front. Hum. Neurosci. 7:703. doi: 10.3389/fnhum.2013.00703

References

[1] Levelt, W. J., Roelofs, A., and Meyer, A. S. 1999. A theory of lexical access in speech production. Behav. Brain Sci. 22:1–75. doi: 10.1017/s0140525x99001776

[2] Christoffels, I. K., Formisano, E., and Schiller, N. O. 2007. Neural correlates of verbal feedback processing: An fMRI study employing overt speech. Hum. Brain Mapp. 28:868–79. doi: 10.1002/hbm.20315

[3] Riès, S., Janssen, N., Dufau, S., Alario, F. X., and Burle, B. 2011. General-purpose monitoring during speech production. J. Cogn. Neurosci. 23:1419–36. doi: 10.1162/jocn.2010.21467

[4] Marshall, R. C., Neuburger, S. I., and Phillips, D. S. 1994. Verbal self-correction and improvement in treated aphasic clients. Aphasiology 8:535–47.

[5] Riès, S. K., Xie, K., Haaland, K. Y., Dronkers, N. F., and Knight, R. T. 2013. Role of the lateral prefrontal cortex in speech monitoring. Front. Hum. Neurosci. 7:703. doi: 10.3389/fnhum.2013.00703