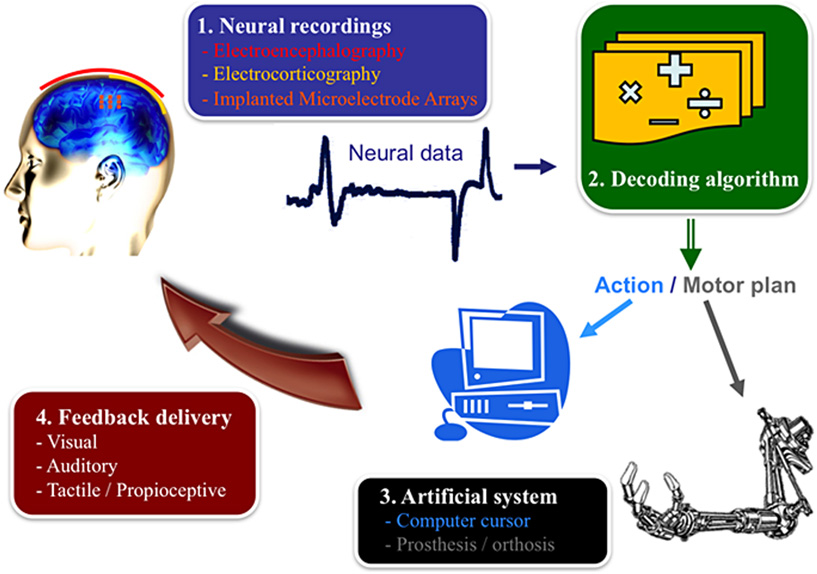

Brain–machine interfaces (BMIs), also called brain–computer interfaces, are an exciting multidisciplinary field that has grown tremendously during the last decade. In a nutshell, BMI is about transforming thought into action and sensation into perception. In a BMI system, neural signals recorded from the brain are fed into a decoding algorithm that translates these signals into motor output, such as moving a computer cursor, steering a wheelchair, or driving a robotic arm. A closed control loop is typically established by providing the subject with visual feedback of the prosthetic device. BMIs have the potential to greatly improve the quality of life of millions of people suffering from spinal cord injury, stroke, amyotrophic lateral sclerosis, and other severely disabling conditions [1].
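To make the idea of a decoding algorithm more concrete, here is a minimal Python sketch of one common approach, a linear decoder, in which each neuron's firing rate is weighted and summed to produce a cursor velocity. The function names, weights, and baseline rates are hypothetical; in a real system the weights would be fitted from calibration data, and many BMIs use more elaborate decoders. The cursor update shows where the closed loop comes in: the user sees the new position and adjusts their neural activity accordingly.

```python
from typing import List, Tuple

def decode_velocity(
    rates: List[float],
    baseline: List[float],
    weights_x: List[float],
    weights_y: List[float],
) -> Tuple[float, float]:
    """Hypothetical linear decoder: firing rates (spikes/s) -> cursor velocity."""
    # Subtract each neuron's resting rate so only changes in activity move the cursor.
    centered = [r - b for r, b in zip(rates, baseline)]
    # Each velocity component is a weighted sum of the centered rates.
    vx = sum(w * c for w, c in zip(weights_x, centered))
    vy = sum(w * c for w, c in zip(weights_y, centered))
    return vx, vy

def update_cursor(
    pos: Tuple[float, float], vel: Tuple[float, float], dt: float
) -> Tuple[float, float]:
    """Move the cursor by velocity * time step; the user sees this as visual feedback."""
    return pos[0] + vel[0] * dt, pos[1] + vel[1] * dt

# Example with three made-up neurons.
vel = decode_velocity(
    rates=[12.0, 5.0, 20.0],
    baseline=[10.0, 10.0, 10.0],
    weights_x=[1.0, 0.0, 0.5],
    weights_y=[0.0, 1.0, 0.0],
)
pos = update_cursor((0.0, 0.0), vel, dt=0.1)
print(vel, pos)
```

Repeating the decode and update steps many times per second is what turns this into a continuous, closed-loop control signal.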

An important aspect of a BMI is the capability to distinguish between different patterns of brain activity, each associated with a particular intention or mental task. Hence, adaptation is a key component of a BMI: on the one hand, users must learn to control their neural activity so as to generate distinct brain patterns; on the other hand, machine learning techniques (mathematical ways to pick patterns out of complex data) must discover the individual brain patterns that characterize the mental tasks executed by the user. In essence, a BMI is a two-learner system.
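The two-learner idea can be illustrated with a deliberately simple classifier. The Python sketch below uses a nearest-centroid classifier (an illustrative choice, not a method named in this article): during calibration it learns the average feature vector for each mental task, and it then assigns a new brain pattern to the task whose average is closest. The task names and feature values are invented; in EEG work the features might be, for example, signal power in certain frequency bands at a few electrodes. The user's side of the learning is to make the patterns for different tasks lie far apart, which makes this decision easy.

```python
from math import dist
from statistics import fmean
from typing import Dict, List

Vector = List[float]

def train_centroids(examples: Dict[str, List[Vector]]) -> Dict[str, Vector]:
    """Average the calibration feature vectors recorded for each mental task."""
    return {
        task: [fmean(values) for values in zip(*vectors)]
        for task, vectors in examples.items()
    }

def classify(features: Vector, centroids: Dict[str, Vector]) -> str:
    """Return the task whose average pattern is closest (Euclidean distance)."""
    return min(centroids, key=lambda task: dist(features, centroids[task]))

# Hypothetical calibration data: two features per trial, two imagined movements.
calibration = {
    "imagine_left_hand": [[1.0, 3.0], [1.2, 2.8]],
    "imagine_right_hand": [[3.0, 1.0], [2.8, 1.2]],
}
centroids = train_centroids(calibration)
print(classify([1.0, 2.5], centroids))
```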

Brain–machine interfaces can be either invasive or non-invasive. Invasive techniques require brain surgery to place recording electrodes directly on or in the brain. Examples include BMIs that use intra-cortical multi-electrode arrays implanted in the brain and BMIs that use electrocorticography (ECoG), which records directly from the exposed surface of the brain. Non-invasive techniques include electroencephalography (EEG), which records from the scalp, i.e., outside the skull (Figure 1, box 1). EEG and ECoG both measure voltage fluctuations resulting from current flowing within the neurons of the brain. At the cost of being invasive, ECoG signals have better spatial resolution (millimeters!) and a better signal-to-noise ratio (a bigger, clearer signal relative to background noise) than EEG signals. Intra-cortical multi-electrode arrays are the most invasive of the three techniques. These electrodes record two different types of signals: the discharge of individual neurons (i.e., spikes), known as single-unit activity (SUA), and the summed synaptic current flowing across the local extracellular space around an implanted electrode, known as the local field potential (LFP).
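Because spikes and the LFP come from the same electrode, a recording system has to separate them. The Python sketch below shows the basic idea under strong simplifying assumptions: a moving average stands in for the low-pass filter that extracts the slow LFP, and spikes are detected as brief dips of the remaining fast signal below a threshold. The window length, the threshold, and the toy signal are made up; real systems use properly designed band-pass filters and more careful spike detection and sorting.

```python
from typing import List, Tuple

def moving_average(signal: List[float], window: int) -> List[float]:
    """Trailing moving average: a crude low-pass filter that keeps slow changes."""
    out, total = [], 0.0
    for i, x in enumerate(signal):
        total += x
        if i >= window:
            total -= signal[i - window]  # drop the sample that just left the window
        out.append(total / min(i + 1, window))
    return out

def split_lfp_and_spikes(
    signal: List[float], window: int, threshold: float
) -> Tuple[List[float], List[int]]:
    """Return (LFP estimate, sample indices where a spike was detected)."""
    lfp = moving_average(signal, window)
    # What is left after removing the slow part contains the fast spikes.
    fast = [x - slow for x, slow in zip(signal, lfp)]
    # Count a spike when the fast signal first drops below -threshold.
    spikes = [
        i for i in range(1, len(fast))
        if fast[i] < -threshold <= fast[i - 1]
    ]
    return lfp, spikes

# Toy recording: a flat baseline with two sharp negative deflections (spikes).
recording = [0.0] * 20
recording[5] = recording[14] = -10.0
lfp, spike_times = split_lfp_and_spikes(recording, window=5, threshold=4.0)
print(spike_times)
```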

- Figure 1 - Your brain in action.

- The different components of a brain–machine interface (BMI) include the recording system, the decoding algorithm, the device to be controlled, and the feedback delivered to the user [2].

Researchers working with EEG signals have made it possible for humans with severe motor disabilities to mentally control a variety of devices, from keyboards to wheelchairs (Figure 2). A few severely disabled people already use an EEG-based BMI regularly for communication. Because EEG signals are weaker and noisier than invasive recordings, EEG-based systems rely heavily on machine learning techniques and must be combined with smart interaction designs and devices. Studies using ECoG signals have demonstrated promising proof of concept for motor neuroprosthetics and for reconstructing speech from the human auditory cortex, a fundamental step toward allowing people to speak again by decoding imagined speech.

- Figure 2 - Brain-controlled wheelchair.

- Users can drive these wheelchairs reliably and safely over long periods of time thanks to the incorporation of shared control (or context awareness) techniques. This wheelchair illustrates the future of intelligent neuroprostheses that, like our spinal cord and musculoskeletal system, work in tandem with motor commands decoded from the user's brain cortex. This relieves users of the need to continuously deliver all the necessary low-level control parameters and thus reduces their cognitive workload [3].

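The idea of shared control can be sketched as a blend between the command decoded from the user's brain and a command proposed by the wheelchair's own obstacle-avoidance system, with the wheelchair taking over more as an obstacle gets closer. The linear blending rule, the `safe_distance` parameter, and the command format (forward speed, turning rate) in this Python sketch are hypothetical choices for illustration and should not be read as the architecture described in [3].

```python
from typing import Tuple

Command = Tuple[float, float]  # (forward speed, turning rate), hypothetical units

def shared_control(
    user_cmd: Command,
    avoid_cmd: Command,
    obstacle_distance: float,
    safe_distance: float = 1.0,
) -> Command:
    """Blend the decoded user command with an obstacle-avoidance command."""
    # alpha = 0 when the path is clear (the user is fully in charge);
    # alpha = 1 when an obstacle is touching (avoidance is fully in charge).
    alpha = max(0.0, min(1.0, 1.0 - obstacle_distance / safe_distance))
    return (
        (1.0 - alpha) * user_cmd[0] + alpha * avoid_cmd[0],
        (1.0 - alpha) * user_cmd[1] + alpha * avoid_cmd[1],
    )

user = (1.0, 0.0)   # the user wants to go straight ahead
avoid = (0.0, 0.5)  # the avoidance system wants to stop and turn
print(shared_control(user, avoid, obstacle_distance=2.0))  # clear path
print(shared_control(user, avoid, obstacle_distance=0.5))  # obstacle nearby
```

Because the wheelchair handles the low-level corrections, the user only needs to issue occasional high-level commands, which is the workload reduction described in the caption.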

On the intra-cortical recording front (i.e., using electrode arrays to record the activity of single neurons), recent advances have provided a “proof of concept,” showing that functional real-world BMI systems can in principle be built. Indeed, the last decade has seen impressive demonstrations of neural control of prosthetic devices by rodents, non-human primates, and humans participating in phase I clinical trials. This progress is expected to accelerate greatly over the next 5–10 years and to lead to a diverse set of clinically viable solutions for different neurological conditions.

These approaches provide complementary advantages, and a combination of technologies may be necessary to achieve the ultimate goal of restoring motor function with a BMI at a level that allows a patient to effortlessly perform tasks of daily living [4]. Moreover, we will need to combine practical BMI tools with smart interaction designs and devices to facilitate use over long periods of time and to reduce the cognitive load [5]. Thus, the question driving BMI research has shifted from “Can such a system ever be built?” to “How do we build reliable, accurate, and robust BMI systems that are clinically viable?” Answering it will require addressing the following key challenges:

The first challenge is to design physical interfaces that can operate permanently and last a lifetime. New hardware ranges from dry EEG electrodes to biocompatible, fully implantable neural interfaces that record ECoG, LFP, and SUA from multiple brain areas. Essential components of all of them are wireless transmission and ultra-low power consumption. Importantly, this new hardware demands new software solutions. Continuous use of a BMI inevitably induces plastic changes in the brain circuitry, which in turn change the patterns of neural signals that encode the user's intentions. The BMI, and the decoding algorithm in particular, will therefore have to keep evolving after deployment. Machine learning techniques, which are advanced mathematical ways to decode signals from the brain, will have to track these transformations unobtrusively while the user operates the brain-controlled device. This mutual adaptation between the user and the BMI is non-trivial.
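One simple way to let a decoder follow slow changes in the user's brain patterns is to nudge its stored patterns toward each new example whose intended class is known, for instance during cued trials. The Python sketch below applies this idea to a nearest-centroid decoder (an illustrative choice, not a method from this article); the class names, the `learning_rate` value, and the drift scenario are hypothetical. A real adaptive BMI must also decide when the user's intention can be trusted as a label, which is part of why mutual adaptation is hard.

```python
from math import dist
from typing import Dict, List

Vector = List[float]

class AdaptiveCentroidDecoder:
    """Nearest-centroid decoder whose class patterns drift along with the user."""

    def __init__(self, centroids: Dict[str, Vector], learning_rate: float = 0.1):
        self.centroids = {label: list(c) for label, c in centroids.items()}
        self.learning_rate = learning_rate

    def predict(self, features: Vector) -> str:
        return min(self.centroids, key=lambda label: dist(features, self.centroids[label]))

    def update(self, features: Vector, true_label: str) -> None:
        # Exponential moving average: move the stored pattern a fraction of the
        # way toward the newest example, so old data is gradually forgotten.
        lr = self.learning_rate
        old = self.centroids[true_label]
        self.centroids[true_label] = [(1 - lr) * c + lr * f for c, f in zip(old, features)]

decoder = AdaptiveCentroidDecoder({"rest": [0.0, 0.0], "move": [2.0, 2.0]}, learning_rate=0.5)
drifted_rest = [1.8, 1.8]  # hypothetical: the user's "rest" pattern has shifted
before = decoder.predict(drifted_rest)
for _ in range(5):  # a few cued "rest" trials
    decoder.update(drifted_rest, "rest")
after = decoder.predict(drifted_rest)
print(before, after)
```

A larger `learning_rate` tracks changes faster but also reacts more to noisy single trials; choosing it is one of the trade-offs in keeping user and decoder in step.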

The second challenge is to decode information about the user's cognitive state that is crucial for volitional interaction and to integrate it into the system. This can include awareness of errors made by the device, anticipation of critical decision points, lapses of attention, and fatigue. Such information will be critical for reducing the cognitive workload and facilitating long-term operation. Cognitive information must be combined with read-outs of diverse aspects of voluntary motor behavior, from continuous movements to discrete intentions (e.g., types of grasping; onset of movements), to achieve natural, effortless operation of complex prosthetic devices.

The third major challenge is to provide realistic sensory feedback that conveys artificial tactile and proprioceptive information, i.e., the awareness of the position and movement of the prosthesis. This type of sensory information has the potential to significantly improve control of the prosthesis by allowing the user to feel the environment in cases where natural sensory afferents are compromised, either through other senses or by stimulating the body to restore the lost sensation. While current efforts mostly focus on broad electrical stimulation of neurons in sensory areas of the brain, new optogenetic approaches (i.e., turning brain cells on and off with light) will allow more selective stimulation of targeted neurons. At a more peripheral level, alternatives include electrical stimulation of peripheral nerves and vibrotactile stimulation of body areas where patients retain somatosensory perception.
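As a minimal illustration of vibrotactile feedback, the Python sketch below maps the grip force measured by a prosthetic hand to the intensity of a vibration motor worn on skin that still has normal sensation. The force range, the 256 intensity levels, and the linear mapping are all hypothetical; a real system would tune the mapping to what each user can actually perceive.

```python
def force_to_vibration(force_newtons: float, max_force: float = 20.0, levels: int = 256) -> int:
    """Map a grip force to a vibration intensity level from 0 to levels - 1."""
    # Clamp to [0, 1] so readings outside the expected range still give a valid level.
    fraction = max(0.0, min(1.0, force_newtons / max_force))
    return round(fraction * (levels - 1))

for force in (0.0, 5.0, 20.0, 40.0):
    print(force, force_to_vibration(force))
```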

Finally, BMI technology holds strong potential as a tool for neuroscience research, as it offers researchers the unique opportunity to directly control the causal relationship between brain activity, sensory input, and behavioral output [6]. Hence, this technology could provide new insights into the neurobiology of action and perception.

References

[1] Nicolelis, M. A. 2001. Actions from thoughts. Nature 409:403–7. doi:10.1038/35053191

[2] Héliot, R., and Carmena, J. M. 2010. Brain–machine interfaces. In Encyclopedia of Behavioral Neuroscience, eds. G. F. Koob, M. Le Moal, and R. F. Thompson, 221–5. Oxford: Academic Press.

[3] Carlson, T. E., and Millán, J. d. R. 2013. Brain-controlled wheelchairs: a robotic architecture. IEEE Robot. Autom. Mag. 20:65–73. doi:10.1109/MRA.2012.2229936

[4] Millán, J. d. R., and Carmena, J. M. 2010. Invasive or noninvasive: understanding brain–machine interface technology. IEEE Eng. Med. Biol. Mag. 29:16–22. doi:10.1109/MEMB.2009.935475

[5] Millán, J. d. R., Rupp, R., Müller-Putz, G. R., Murray-Smith, R., Giugliemma, C., Tangermann, M. et al. 2010. Combining brain–computer interfaces and assistive technologies: state-of-the-art and challenges. Front. Neurosci. 4:161. doi:10.3389/fnins.2010.00161

[6] Carmena, J. M. 2013. Advances in neuroprosthetic learning and control. PLoS Biol. 11:e1001561. doi:10.1371/journal.pbio.1001561